VAR and Cointegration Flashcards

(29 cards)

What are the consequences of including unnecessary deterministic terms in the first step of the Engle-Granger cointegration testing framework?

You lose power, but will still reject if the series are cointegrated. The critical value will be more negative than it would be with the correct deterministic terms.

Explain the Engle-Granger framework

First test if individual variables are I(1) using ADF. If not, they cannot be cointegrated. Then, test whether the residuals from the first-stage regression are covariance stationary, which is an indirect test of the eigenvalue. Johansen directly tests the eigenvalues.

Write out a general form VAR(P)
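
One common way to write it, with notation assumed here rather than taken from the deck: y_t is a k-by-1 vector, Phi_0 a k-by-1 intercept, Phi_1, ..., Phi_P are k-by-k coefficient matrices, and epsilon_t is vector white noise.

```latex
\[
  \mathbf{y}_t = \boldsymbol{\Phi}_0
    + \boldsymbol{\Phi}_1 \mathbf{y}_{t-1}
    + \boldsymbol{\Phi}_2 \mathbf{y}_{t-2}
    + \dots
    + \boldsymbol{\Phi}_P \mathbf{y}_{t-P}
    + \boldsymbol{\epsilon}_t
\]
```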

What are some recent methods to work with large VARs?

LASSO, machine learning, and Bayesian methods, with the possibility of imposing structure

Define Vector White Noise

1) Zero unconditional mean 2) Constant covariance (need not be diagonal) 3) Zero autocovariance. May be dependent. No restrictions on the conditional mean.

When is a VAR(1) stationary?

When all eigenvalues of the transition matrix are less than 1 in absolute value (modulus, if complex) and the errors are white noise
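
The eigenvalue condition can be checked numerically. A minimal sketch, assuming NumPy; the function name and the two example matrices are made up for illustration:

```python
import numpy as np

def is_stationary_var1(phi):
    """Return True if every eigenvalue of phi lies strictly inside the unit circle."""
    eigenvalues = np.linalg.eigvals(np.asarray(phi, dtype=float))
    # np.abs gives the modulus, so complex eigenvalues are handled too.
    return bool(np.all(np.abs(eigenvalues) < 1.0))

# Illustrative transition matrices (hypothetical values).
phi_stable = np.array([[0.5, 0.1], [0.2, 0.3]])
phi_unit_root = np.array([[1.0, 0.0], [0.0, 0.5]])

print(is_stationary_var1(phi_stable))
print(is_stationary_var1(phi_unit_root))
```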

What is the effect of scaling variables to unit variance?

It can make the interpretation easier. It has no effect on persistence, inference or model selection

What is companion form?

Companion form is when a higher-order VAR or AR is written as a VAR(1). It simplifies computing the autocovariance function.

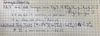

Show how an AR(2) can be written as a VAR(1)
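
A sketch of the standard construction, assuming a zero-mean AR(2) with coefficients phi_1 and phi_2 (symbols chosen here for illustration). Stack y_t and y_{t-1} into one vector; the second row is just the identity y_{t-1} = y_{t-1}.

```latex
\[
  y_t = \phi_1 y_{t-1} + \phi_2 y_{t-2} + \epsilon_t
  \quad\Longleftrightarrow\quad
  \begin{bmatrix} y_t \\ y_{t-1} \end{bmatrix}
  =
  \begin{bmatrix} \phi_1 & \phi_2 \\ 1 & 0 \end{bmatrix}
  \begin{bmatrix} y_{t-1} \\ y_{t-2} \end{bmatrix}
  +
  \begin{bmatrix} \epsilon_t \\ 0 \end{bmatrix}
\]
```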

What is a problem with forecasting with VARs?

For forecasts two or more steps ahead (h >= 2), the forecast errors are not white noise and follow an MA(h-1) structure.
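
The MA(h-1) structure can be seen directly in a VAR(1), used here as an assumed special case with coefficient matrix Phi_1. Iterating the model forward h steps and subtracting the forecast leaves a weighted sum of the h most recent shocks, so forecast errors made at adjacent dates share h-1 shocks:

```latex
\[
  \mathbf{y}_{t+h} - \mathrm{E}_t\!\left[\mathbf{y}_{t+h}\right]
  = \sum_{i=0}^{h-1} \boldsymbol{\Phi}_1^{\,i}\, \boldsymbol{\epsilon}_{t+h-i}
\]
```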

What are the solutions to autocovariance in forecast errors?

Define Granger-Causality

X does not Granger-cause Y if conditioning on past values of X does not change the forecast for Y

How do we test for Granger-Causality?
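
One standard approach is to test whether the coefficients on all lags of X in the equation for Y are jointly zero. The sketch below uses a plain F-test built from restricted and unrestricted OLS fits, assuming NumPy; the function name and the simulated data are illustrative, and Wald or likelihood-ratio versions of the same restriction are also common.

```python
import numpy as np

def granger_f_stat(y, x, lags=1):
    """F statistic for H0: lags of x add nothing to a regression of y on its own lags."""
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    n = len(y) - lags
    target = y[lags:]
    # Column j holds the series lagged by j + 1 periods.
    y_lags = np.column_stack([y[lags - j - 1 : len(y) - j - 1] for j in range(lags)])
    x_lags = np.column_stack([x[lags - j - 1 : len(x) - j - 1] for j in range(lags)])
    const = np.ones((n, 1))
    restricted = np.hstack([const, y_lags])
    unrestricted = np.hstack([const, y_lags, x_lags])

    def ssr(design):
        beta, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ beta
        return float(resid @ resid)

    ssr_r, ssr_u = ssr(restricted), ssr(unrestricted)
    dof = n - unrestricted.shape[1]
    return ((ssr_r - ssr_u) / lags) / (ssr_u / dof)

# Simulated example (hypothetical parameters): x drives y, but y does not drive x.
rng = np.random.default_rng(0)
T = 500
x = rng.standard_normal(T)
e = rng.standard_normal(T)
y = np.zeros(T)
for t in range(1, T):
    y[t] = 0.5 * y[t - 1] + 0.8 * x[t - 1] + e[t]

f_x_to_y = granger_f_stat(y, x, lags=1)  # large: reject "x does not Granger-cause y"
f_y_to_x = granger_f_stat(x, y, lags=1)  # small: no evidence that y Granger-causes x
```

Each statistic is compared with an F(lags, dof) critical value; a large value rejects the null of no Granger-causality.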

Define an Impulse Response Function (IRF)

The IRF of y_i with respect to a shock in e_j is the change in y_{i,t+s}, for s >= 0, in response to a one-standard-deviation shock to e_{j,t}

How do we compute orthogonal shocks and their effect?

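We need Sigma^(-0.5), the inverse of a square root of the error covariance matrix, which transforms the correlated errors into uncorrelated, unit-variance shocks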

What are 4 ways of computing Impulse Response Functions?

1) Assume sigma is diagonal 2) Choleski decomposition 3) Generalized IR 4) Spectral decomposition

Explain Choleski decomposition

Assume a causal ordering, so that some variables do not affect others contemporaneously. Examples: nominal variables may react faster than real ones; inflation and unemployment have no immediate effect on the Fed Funds rate (interest rate).
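
A minimal sketch of Cholesky-orthogonalized impulse responses for a VAR(1), assuming NumPy; the coefficient and covariance values are made up. With Sigma = P P' and P lower triangular, the response at step s is Phi^s P, so the variable ordered first does not react on impact to the shocks ordered after it.

```python
import numpy as np

def cholesky_irf(phi, sigma, horizon):
    """Orthogonalized IRFs of a VAR(1): step-s response is phi^s @ P, where sigma = P @ P.T."""
    phi = np.asarray(phi, dtype=float)
    p = np.linalg.cholesky(np.asarray(sigma, dtype=float))  # lower-triangular factor
    responses = np.empty((horizon + 1, *phi.shape))
    power = np.eye(phi.shape[0])  # phi^0
    for s in range(horizon + 1):
        responses[s] = power @ p
        power = phi @ power
    return responses

# Illustrative (hypothetical) parameters.
phi = np.array([[0.5, 0.1], [0.2, 0.3]])
sigma = np.array([[1.0, 0.5], [0.5, 2.0]])

# irf[s][i, j] is the response of variable i, s steps after a one-std orthogonal shock j.
irf = cholesky_irf(phi, sigma, horizon=10)
```

Reordering the variables changes P and therefore the responses, which is why the causal ordering has to be justified.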

Explain Generalized IR

Repeated application of Choleski decomposition. Sqrt(sigma) is a full square matrix. More general than Choleski decomposition, but harder to interpret.

Explain spectral decomposition

Again, Sqrt(sigma) is a full square matrix, with no ordering. Based on eigenvalues and eigenvectors.
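
A short sketch of the eigenvalue-based square root, assuming NumPy and a symmetric positive-definite covariance matrix; the example values are made up. Writing Sigma = V diag(lambda) V', the symmetric square root is V diag(sqrt(lambda)) V'.

```python
import numpy as np

def spectral_sqrt(sigma):
    """Symmetric square root of a symmetric positive-definite matrix via its eigendecomposition."""
    eigenvalues, eigenvectors = np.linalg.eigh(np.asarray(sigma, dtype=float))
    return eigenvectors @ np.diag(np.sqrt(eigenvalues)) @ eigenvectors.T

sigma = np.array([[1.0, 0.5], [0.5, 2.0]])  # hypothetical error covariance
root = spectral_sqrt(sigma)
```

Unlike the Cholesky factor, `root` is symmetric with non-zero off-diagonal entries, so no variable ordering is imposed.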

What are 3 ways of computing IRF confidence intervals?

1) Monte Carlo 1: Estimate the parameters, the error covariance matrix and the covariance matrix of the parameters. Simulate parameters from their asymptotic distribution and compute IRFs. Repeat. 2) Monte Carlo 2: Estimate the parameters and the covariance matrix of the errors. Simulate parameters from their asymptotic distribution and compute IRFs. Repeat. 3) Bootstrap: Estimate the parameters and compute residuals. Resample the residuals with replacement and use them to compute a new time series. Estimate the parameters with the new time series and compute IRFs. Repeat.
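
The bootstrap steps can be sketched for a VAR(1) as follows, assuming NumPy. All function names, the simulated data, and the choice of percentile bands are illustrative, and the responses are left non-orthogonalized (Phi^s) to keep the example short.

```python
import numpy as np

def fit_var1(y):
    """OLS for y_t = c + phi @ y_{t-1} + e_t; returns (c, phi, residuals)."""
    x = np.hstack([np.ones((len(y) - 1, 1)), y[:-1]])
    coef, *_ = np.linalg.lstsq(x, y[1:], rcond=None)
    resid = y[1:] - x @ coef
    return coef[0], coef[1:].T, resid

def irf_var1(phi, horizon):
    """Non-orthogonalized responses phi^s for s = 0..horizon."""
    out = [np.eye(phi.shape[0])]
    for _ in range(horizon):
        out.append(phi @ out[-1])
    return np.array(out)

def bootstrap_irf_bands(y, horizon=8, n_boot=200, level=0.90, seed=0):
    """Percentile bands for VAR(1) IRFs from a residual bootstrap."""
    rng = np.random.default_rng(seed)
    c, phi, resid = fit_var1(y)
    resid = resid - resid.mean(axis=0)
    draws = np.empty((n_boot, horizon + 1, *phi.shape))
    for b in range(n_boot):
        # Resample whole residual vectors (rows) with replacement.
        shocks = resid[rng.integers(0, len(resid), size=len(resid))]
        # Rebuild a new time series from the estimated model and resampled shocks.
        y_star = np.empty_like(y)
        y_star[0] = y[0]
        for t in range(1, len(y)):
            y_star[t] = c + phi @ y_star[t - 1] + shocks[t - 1]
        # Re-estimate on the new series and store its IRFs.
        _, phi_star, _ = fit_var1(y_star)
        draws[b] = irf_var1(phi_star, horizon)
    tail = (1.0 - level) / 2.0
    lower, upper = np.quantile(draws, [tail, 1.0 - tail], axis=0)
    return lower, upper

# Simulated data with hypothetical parameters.
rng = np.random.default_rng(1)
phi_true = np.array([[0.5, 0.1], [0.2, 0.3]])
y = np.zeros((300, 2))
for t in range(1, 300):
    y[t] = phi_true @ y[t - 1] + rng.standard_normal(2)

lower, upper = bootstrap_irf_bands(y)
```

Resampling rows rather than individual entries keeps the contemporaneous correlation between the errors intact.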

When is a variable I(1)?

A variable is integrated of order 1 if it is non-stationary but its first differences are stationary

Define cointegration

A set of k variables y are cointegrated if at least two variables are I(1) and there exists a non-zero, reduced rank k-by-k matrix pi such that: pi times y is stationary

Why must pi be reduced rank for variables to be cointegrated?

If pi is full rank and pi times y is stationary, then y must also be stationary. If pi is full rank, it has only non-zero eigenvalues. Hence Phi = I + pi (the VAR(1) coefficient matrix) does not have any unit eigenvalues, so the system contains no unit roots.
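
The link between pi and the VAR coefficients can be written out for a VAR(1) without deterministic terms, an assumed special case; alpha and beta are the usual k-by-r loading and cointegrating matrices, symbols introduced here for illustration. Subtracting y_{t-1} from both sides gives the error-correction form, and cointegration corresponds to 0 < r < k:

```latex
\[
  \mathbf{y}_t = \boldsymbol{\Phi}\,\mathbf{y}_{t-1} + \boldsymbol{\epsilon}_t
  \;\;\Longrightarrow\;\;
  \Delta \mathbf{y}_t
  = (\boldsymbol{\Phi} - \mathbf{I})\,\mathbf{y}_{t-1} + \boldsymbol{\epsilon}_t
  = \boldsymbol{\pi}\,\mathbf{y}_{t-1} + \boldsymbol{\epsilon}_t,
  \qquad
  \boldsymbol{\pi} = \boldsymbol{\alpha}\boldsymbol{\beta}',
  \quad
  \operatorname{rank}(\boldsymbol{\pi}) = r
\]
```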

Explain the Engle-Granger methodology

Tests for unit roots. Can only test whether there is one cointegrating relationship. Best for only two variables. Variables must be I(1). Estimate the long-run relationship in a cross-sectional regression on levels. Test if the errors are stationary by ADF. If so, the variables are cointegrated.
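
The two steps can be sketched with NumPy alone. This is a simplified illustration on simulated data: the function names are made up, and the second step uses a Dickey-Fuller regression with no augmentation lags rather than a full ADF.

```python
import numpy as np

def ols(x, y):
    """Return OLS coefficients and residuals for y = x @ beta + u."""
    beta, *_ = np.linalg.lstsq(x, y, rcond=None)
    return beta, y - x @ beta

def engle_granger_stat(y, x):
    """Step 1: levels regression with a constant. Step 2: DF t-stat on the residuals."""
    design = np.column_stack([np.ones(len(x)), x])
    beta, u = ols(design, y)
    # Dickey-Fuller regression without lags: delta u_t = rho * u_{t-1} + error.
    du, u_lag = np.diff(u), u[:-1]
    rho = (u_lag @ du) / (u_lag @ u_lag)
    err = du - rho * u_lag
    se = np.sqrt((err @ err) / (len(du) - 1) / (u_lag @ u_lag))
    return beta, rho / se

# Simulated series (hypothetical parameters).
rng = np.random.default_rng(0)
T = 500
x = np.cumsum(rng.standard_normal(T))        # random walk, I(1)
y = 1.0 + 2.0 * x + rng.standard_normal(T)   # cointegrated with x
z = np.cumsum(rng.standard_normal(T))        # independent random walk

beta, stat_coint = engle_granger_stat(y, x)   # strongly negative statistic
_, stat_spurious = engle_granger_stat(z, x)   # much closer to zero
```

Because the residuals come from an estimated regression, the statistic is compared with Engle-Granger critical values rather than the standard Dickey-Fuller ones, and those critical values depend on the deterministic terms included in the first step.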