S5 Flashcards

(11 cards)

Is the point (0, 0) a subspace of ℝ²?

Yes

The zero vector is in it; adding any two elements gives another element, since (0, 0) + (0, 0) = (0, 0); and any scalar multiple of an element is an element, because c · (0, 0) = (0, 0) for any c ∈ ℝ.

Is the point (2, 3) a subspace of ℝ²?

No

The zero vector is not an element (the other two conditions also fail).

Is the line y = 3x a subspace of ℝ²?

Yes

The zero vector is in it, since 0 = 3 · 0. If (a, b) and (c, d) are in it, so b = 3a and d = 3c, then (a+c, b+d) is also in it, because b+d = 3a+3c = 3(a+c). And if (a, b) is in it, then so is (ka, kb) for any k ∈ ℝ, because kb = k · (3a) = 3 · (ka).
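The three conditions for y = 3x can also be spot-checked numerically. This is a minimal sketch: the sample points and scalars are arbitrary choices, not part of the card, and passing checks on samples is evidence rather than a proof.

```python
from itertools import product

def on_line(p):
    """Return True if p = (x, y) satisfies y = 3x."""
    x, y = p
    return y == 3 * x

# Hypothetical samples; integers keep the arithmetic exact.
points = [(x, 3 * x) for x in range(-3, 4)]
scalars = [-2, -1, 0, 1, 5]

# Condition 1: the zero vector is on the line.
contains_zero = on_line((0, 0))

# Condition 2: the sum of any two sample points stays on the line.
closed_under_addition = all(
    on_line((u[0] + v[0], u[1] + v[1])) for u, v in product(points, points)
)

# Condition 3: any sample scalar multiple of a sample point stays on the line.
closed_under_scaling = all(
    on_line((k * u[0], k * u[1])) for k, u in product(scalars, points)
)

print(contains_zero, closed_under_addition, closed_under_scaling)
```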

Is the line y = −2x + 1 a subspace of ℝ²?

No

The zero vector is not in it, because 0 ≠ −2 · 0 + 1 (the other two conditions also fail).

Is the first quadrant Q1 = {(x, y) ∈ ℝ² : x, y ≥ 0} a subspace of ℝ²?

No

The third condition fails: take for instance u = (1, 0) ∈ Q1 and c = −1 ∈ ℝ; then cu = (−1, 0) ∉ Q1. (But the other two conditions do hold.)

Is the union of the first and third quadrants, Q1 ∪ Q3 = {(x, y) ∈ ℝ² : x, y ≥ 0 or x, y ≤ 0}, a subspace of ℝ²?

No

The second condition fails: take for instance u = (1, 0) ∈ Q1 and v = (0, −1) ∈ Q3; then u + v = (1, −1) ∉ Q1 ∪ Q3. (But the other two conditions do hold.)
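The two quadrant counterexamples can be replayed in a few lines. A minimal sketch; the helper names `in_q1`, `in_q1_or_q3`, `add` and `scale` are made up for illustration.

```python
def in_q1(p):
    """Membership test for the first quadrant: x, y >= 0."""
    x, y = p
    return x >= 0 and y >= 0

def in_q1_or_q3(p):
    """Membership test for Q1 ∪ Q3: both coordinates >= 0 or both <= 0."""
    x, y = p
    return (x >= 0 and y >= 0) or (x <= 0 and y <= 0)

def add(u, v):
    return (u[0] + v[0], u[1] + v[1])

def scale(k, u):
    return (k * u[0], k * u[1])

u = (1, 0)
v = (0, -1)

# Q1 fails closure under scalar multiplication: (-1) * (1, 0) = (-1, 0).
q1_scaled = scale(-1, u)

# Q1 ∪ Q3 fails closure under addition: (1, 0) + (0, -1) = (1, -1).
union_sum = add(u, v)

print(in_q1(u), in_q1(q1_scaled))
print(in_q1_or_q3(u), in_q1_or_q3(v), in_q1_or_q3(union_sum))
```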

For any vectors v₁, …, vₙ ∈ ℝᵐ, Span(v₁, …, vₙ) is a subspace of ℝᵐ.

True

It is the set of all linear combinations of the vᵢ. The zero vector is a linear combination (take all coefficients equal to 0); if two vectors are linear combinations, then their sum is also a linear combination; and if one vector is a linear combination, then so is any scalar multiple of it.

For A ∈ ℝ^(m×n) and b ∈ ℝᵐ, the set of solutions of Ax = b is a subspace of ℝⁿ.

False

If b ≠ 0, then the zero vector is not a solution (A0 = 0 ≠ b), the sum of two solutions is not a solution (A(u+v) = b + b = 2b ≠ b), and a scalar multiple of a solution is not always a solution (A(cu) = cb, which equals b only when c = 1). (When b = 0, the set of solutions is a subspace, but the question is whether the claim holds for all b.)
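A small concrete system shows all three failures at once. The matrix A = [1 1], the right-hand side b = (2) and the solutions u, v are hypothetical choices made only for this sketch.

```python
def matvec(A, x):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

# Hypothetical example: one equation x1 + x2 = 2, so b != 0.
A = [[1, 1]]
b = [2]

u = [2, 0]   # a solution: 2 + 0 = 2
v = [0, 2]   # another solution: 0 + 2 = 2

zero_image = matvec(A, [0, 0])                              # A0 = 0, not b
sum_image = matvec(A, [u[0] + v[0], u[1] + v[1]])           # A(u+v) = 2b
scaled_image = matvec(A, [3 * u[0], 3 * u[1]])              # A(3u) = 3b

print(zero_image, sum_image, scaled_image)
```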

The columns of an n × n invertible matrix form a basis for ℝⁿ.

True

By the Invertible Matrix Theorem (or by thinking about row reduction), the columns are linearly independent and they span ℝⁿ, so they form a basis.

Here’s how you can see it directly from row reduction: if the matrix A is invertible, then it row reduces to I, which means that the system Ax = b has exactly one solution for every b. The fact that it always has at least one solution means that the columns of A span ℝⁿ, and the fact that it has at most one solution, applied to b = 0, means that Ax = 0 has only the trivial solution x = 0, so the columns of A are linearly independent.
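For a 2 × 2 case, the "exactly one solution for every b" statement can be checked with exact arithmetic. This sketch uses Cramer's rule rather than row reduction, purely because it is short; the matrix A and the helper `solve_2x2` are illustrative assumptions.

```python
from fractions import Fraction

def solve_2x2(A, b):
    """Solve Ax = b for an invertible 2x2 matrix A using Cramer's rule."""
    (a11, a12), (a21, a22) = A
    det = Fraction(a11 * a22 - a12 * a21)
    if det == 0:
        raise ValueError("matrix is not invertible")
    x1 = (b[0] * a22 - a12 * b[1]) / det
    x2 = (a11 * b[1] - b[0] * a21) / det
    return (x1, x2)

# Hypothetical invertible matrix (determinant 2*1 - 1*1 = 1).
A = [[2, 1],
     [1, 1]]

# Spanning: a solution exists for this sample right-hand side.
x = solve_2x2(A, (3, 2))

# Independence: the only solution of Ax = 0 is x = 0.
x_homogeneous = solve_2x2(A, (0, 0))

print(x, x_homogeneous)
```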

If A = [a₁ ··· aₙ], then {a₁, …, aₙ} is a basis for Col(A).

False

The aᵢ do span Col(A) (by definition), but they need not be linearly independent, and then they would not be a basis. (A subset of them must be a basis, though.)
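A quick counterexample, using a hypothetical 2 × 2 matrix whose second column is twice the first: both columns span Col(A), but only one of them is needed for a basis.

```python
# Hypothetical matrix with dependent columns: a2 = 2 * a1.
A = [[1, 2],
     [2, 4]]

a1 = [row[0] for row in A]   # first column
a2 = [row[1] for row in A]   # second column

# The columns are linearly dependent because a2 is a scalar multiple of a1.
dependent = a2 == [2 * t for t in a1]

# Equivalent check for a 2x2 matrix: the determinant vanishes.
det = A[0][0] * A[1][1] - A[0][1] * A[1][0]

print(a1, a2, dependent, det)
```

Here {a₁} alone is a basis for Col(A), which is the "subset" the card mentions.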

If A ∈ ℝ^(n×n), then every vector in ℝⁿ is either in Nul(A) or in Col(A).

False

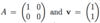

Take for instance the matrix A shown above and v = (1, 1). Then Av = (1, 0) ≠ 0, so v ∉ Nul(A). Also, Ax = v has no solution: A is in echelon form with fewer pivots than rows, so its bottom row is zero, while the bottom entry of v is 1. Hence v ∉ Col(A).

(It is true that dim(Nul(A)) + dim(Col(A)) = n, but this does not imply that every vector is in the union of these two subspaces.)
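The counterexample can be checked in code. The matrix below is an assumption: it is one echelon-form matrix consistent with Av = (1, 0), and not necessarily the matrix in the image.

```python
def matvec(A, x):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

# Assumed matrix (the original image is not available).
A = [[1, 0],
     [0, 0]]
v = [1, 1]

Av = matvec(A, v)

# v is in Nul(A) exactly when Av is the zero vector.
in_nul = Av == [0, 0]

# For this particular A, Ax = (x1, 0), so Col(A) is exactly the set of
# vectors whose second entry is 0.
in_col = v[1] == 0

print(Av, in_nul, in_col)
```

For this A, Nul(A) is the y-axis and Col(A) is the x-axis: the dimensions add up to 2, but the union of the two axes is far from all of ℝ².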