Part 1 (All but hashing) Flashcards

(41 cards)

What is an ADT?

An abstract data type is a specification of a data structure in terms of what we can do with it (its functionality) rather than what it actually is (its specific representation)

The implementation affects (often dramatically) the … of the solution method, but not the …of the solution.

Efficiency

correctness

What is encapsulation?

Ideally the user of an ADT will not need to know, nor be able to influence, the actual representation of data

(hiding data from user and thus preventing alteration)

What are some example ADTs?

- List

- Queue

- Stack

- Dictionary

- Tree

- Graph

What are some example data structures?

- array

- linked list

- binary search tree

- hash table

What are 3 main characteristics of an algorithm designed?

- Domain of definition: set of legal inputs

- Correctness: it gives correct output for each legal input. This depends on the problem we are trying to solve, and can be tricky to prove

- Resource use: usually computing time and memory space.

- This depends on the input, and on the implementation (hardware, programmer skill, compiler, language, …).

- It usually grows as the input size grows.

- There is a tradeoff between resources (for example, time vs space).

- Running time is usually more important than space use.

How running time scales with problem size

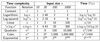

Table: Relative growth of running time T(n) when the input size increases from n = 10 to n = 1000 provided that T(10) = 1.

How problem size scales with running time

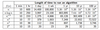

Table: The largest data sizes n that can be processed by an algorithm with time complexity Θ(f(n)) provided that T(10) = 1 minute

Estimating running time: harder example

worst-case versus average-case analysis

a good worst-case bound is always useful, but it is just a first step and we should aim to refine the analysis for important algorithms. Average-case analysis is often more practically useful, provided the algorithm will be run on “random” data

For large enough n, a linear time algorithm will always beat a quadratic time one

true/false?

true

Why do constants need to be taken into account at times?

An algorithm with running time 10−10n 2 is probably better in practice than one with running time 1010n, since the latter will eventually beat the former, but only on instances of size at least 1020, which is rarely met in practice

What can be said about f is O(g)?

“f is Big-Oh of g”

if there is some C > 0 and some n0 ∈ N such that for all n ≥ n0, f(n) ≤ Cg(n)

f grows at most as fast as g

What can be said about f is Ω(g)?

“f is big-Omega of g”

if g is O(f)

f grows at least as fast as g

What can be said about f is Θ(g)?

“f is big-Theta of g”

if f is O(g) and g is O(f)

f grows at the same rate as g

Rules for asymptotic notation

(irrelevance of constant factors)

if c > 0 is constant then cf is Θ(f)

(transitivity)

If f is O(g)/Ω(g)/Θ(g) and g is O(h)/Ω(h)/Θ(h), then f is O(h)/Ω(h)/Θ(h)

(sum rule)

If f1 is O(g1) and f2 is O(g2) then f1 + f2 is O(max{g1, g2})

(product rule)

If f1 is O(g1) and f2 is O(g2) then f1f2 is O(g1g2)

(limit rule)

Suppose that L := limn→∞ f(n)/g(n) exists

Then

- if L = 0 then f is O(g) and f is not Ω(g);

- if 0 < L < ∞ then f is Θ(g);

- if L = ∞ then f is Ω(g) and f is not O(g)

Write f ≺ g if f is O(g) but f is not Θ(g), so g grows at a faster rate than f.

List runtimes

log n ≺ (log n)2 ≺ √ n ≺ n ≺ n log n ≺ n(log n)2 ≺ n2 ≺ n3 ≺ · · · ≺ (1.5)n ≺ 2n ≺ n! ≺ nn

f(n) occurring are log n, n, (n log n), n2 , n3 , 2 n , and each grows considerably faster than the previous one

which 2 have the smallest gap?

gap between n and n log n is the smallest

What is a list and what are some of its basic operations?

A list (or sequence) is an ordered collection of items

basic operations:

- return the size of the list; if this is zero, list is empty;

- retrieve the item in a given position;

- delete an item in a given position;

- insert an item at a given position;

- given the position of an item, find the position of the next item, if it exists (this gives an iterator over the data structure).

How do size and capacity differ?

size is the number of elements in an array

capacity is the total number of places in an array

In what runtime does retrieval, deletion and insertion occur, for a simple array?

Retrieval takes time Θ(1) - (this is notation for constant time)

Deletion takes time Θ(n − i) if the deleted element was in position i

Insertion takes time Θ(n − i) if the inserted element ends up in position i, not counting the case where we need to increase capacity

statically allocated memory is from the…?

and

automatically allocated memory is from the…?

memory heap

and

memory stack (smaller)

what does malloc do?

dynamically allocates memory from the heap

what does realloc do?

resizes the memory required for an object