Parametric Statistics - Dr. Wofford Flashcards

Mean

•Mean: average

- •Sum of a set of scores/number of scores

- •µ= average of a population; x-bar= average of a sample

- •Best measure to use with ratio or interval data
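A minimal sketch of the definition above. The `scores` list is made-up illustration data, not from the course.

```python
from statistics import mean

scores = [4, 8, 6, 10]  # hypothetical sample scores

# Mean = sum of a set of scores / number of scores
x_bar = sum(scores) / len(scores)

# The standard library gives the same answer
assert x_bar == mean(scores)
print(x_bar)
```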

Types of Data

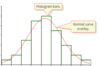

- •Normally distributed data

- •Bell shaped curve

- •Parametric Statistics

- •Nonnormally distributed data

- •Skewed curve

- •Skewed to the left

- •Skewed to the right

- •Nonparametric statistics

3 Post hoc testing options

- •Different options

- •Tukey (SPSS does for you)

- •Scheffe (SPSS does for you)

- •Most flexible method

- •Bonferroni t-test (by hand, but SPSS might be able to run it for you)

- •Adjusted alpha = alpha level / # of tests
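The Bonferroni adjustment can be sketched in a few lines. The function name `bonferroni_alpha` and the three-comparison example are assumptions chosen for illustration.

```python
def bonferroni_alpha(alpha, n_tests):
    """Per-test alpha: the overall alpha level divided by the number of tests."""
    return alpha / n_tests

# Three groups (A, B, C) give three pairwise comparisons: A-B, A-C, B-C
adjusted = bonferroni_alpha(0.05, 3)
print(round(adjusted, 4))
```

Each pairwise t-test would then be judged against `adjusted` instead of 0.05.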

Three things about ANOVA (stuff besides assumptions):

- •ANOVA determines whether the means of >2 samples are different

- •Was developed to prevent Type 1 error from using multiple t-tests

- •ANOVA partitions the total variance within a sample (SST) into two sources: a treatment effect (between the groups) and unexplained sources of variation, or error variation, among the subjects (within the groups)
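The partition described above can be checked numerically. This is a sketch on made-up data: the three groups and the variable names are hypothetical.

```python
from statistics import mean

# Hypothetical scores for three treatment groups
groups = {
    "A": [2, 4, 6],
    "B": [5, 7, 9],
    "C": [8, 10, 12],
}

all_scores = [x for g in groups.values() for x in g]
grand_mean = mean(all_scores)

# Total: every score compared with the grand mean
ss_total = sum((x - grand_mean) ** 2 for x in all_scores)

# Between groups (treatment effect): group means compared with the grand mean
ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups.values())

# Within groups (error): each score compared with its own group mean
ss_within = sum((x - mean(g)) ** 2 for g in groups.values() for x in g)

print(ss_total, ss_between, ss_within)
```

The total always equals the between-groups part plus the within-groups part.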

Variability means

The dispersion of scores

Homogeneity of variance

•Homogeneity of variance: relatively similar degrees of variance between groups

- •Levene’s test for homogeneity of variance

- •Want the p value to be >.05

Sum of Squares is squared because:

to get rid of the negative values
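A short sketch of why squaring is needed, using made-up scores: raw deviations from the mean cancel out to zero, while squared deviations do not.

```python
from statistics import mean

scores = [2, 4, 6, 8]  # hypothetical scores
m = mean(scores)

# Deviations from the mean: negatives and positives cancel
deviations = [x - m for x in scores]
sum_dev = sum(deviations)

# Squaring removes the negative signs, so the total reflects spread
sum_sq = sum(d ** 2 for d in deviations)

print(sum_dev, sum_sq)
```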

Nonnormally distributed data

- Skewed curve

- skewed to the left

- skewed to the right

- Nonparametric statistics

Prediction

- •Prediction

- •Simple and multiple linear regression

- •Logistic regression

A category of parametric tests

T-Stat for T-Test

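- •T-stat = difference in means between groups / variability of the scores (the standard error of the mean difference, which is built from the group variances)

- T-stat = (x-bar2 - x-bar1) / SE, where SE comes from the sample variances (s²) and the group sizes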

- •Increased t-stat values=increased probability of having a significant result

- •Greater mean difference with less variance equates to a higher t-stat

- •Compare the t-stat to a critical value (located in the appendix) to determine whether it is significant at the predetermined alpha level

- (The test also produces a p-value that shows whether the results are statistically significant)
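A hedged worked example of the t-stat, assuming the pooled-variance form of the independent t-test, in which the mean difference is divided by the standard error of that difference. The two groups are made-up data, and the critical value is the two-tailed value for 8 degrees of freedom at alpha = .05 from a standard t table.

```python
from math import sqrt
from statistics import mean, variance

group1 = [10, 12, 14, 16, 18]  # hypothetical scores
group2 = [15, 17, 19, 21, 23]  # hypothetical scores

n1, n2 = len(group1), len(group2)

# Pooled variance: weighted average of the two sample variances (assumed form)
pooled_var = ((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / (n1 + n2 - 2)

# Standard error of the difference between the two means
se_diff = sqrt(pooled_var * (1 / n1 + 1 / n2))

# Greater mean difference with less variance gives a higher t-stat
t_stat = (mean(group2) - mean(group1)) / se_diff
df = n1 + n2 - 2

critical_value = 2.306  # two-tailed, df = 8, alpha = .05 (standard t table)
significant = abs(t_stat) > critical_value
print(t_stat, df, significant)
```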

•Post hoc testing – what is it for, what else is it called?

- Post hoc testing – used to find where the difference lies after you establish there is a significant difference somewhere with ANOVA

- Also called unplanned comparisons

If T-stat is high, _________ is low (or should be?).

p-value

The p-value is compared against alpha (0.05 is the usual standard)

ANOVA and t-test are really about the same thing?

yes

T-Test: Test statistic

•Test statistic: t-stat

•ANCOVA:

1 DV, 1 IV, 1+ covariates

- •Covariates must not be correlated

In parametric statistics, every test has _________ and the researcher must _______________.

assumptions

meet the assumptions of the test

Mean Square

•Mean square (MS): SS/(n-1) = sample variance

- •Combats the problem of sample size with sum of squares

- •Is called variance and is a true measure of variability

- •Sample variance is annotated as s²
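A sketch of the mean square on made-up scores. It also shows the sample-size problem: repeating the same scores doubles the sum of squares, while the mean square stays in the same range.

```python
from statistics import mean, variance

def sum_of_squares(scores):
    """Sum of squared deviations from the mean (SS)."""
    m = mean(scores)
    return sum((x - m) ** 2 for x in scores)

scores = [2, 4, 6, 8]        # hypothetical sample
bigger = scores * 2          # same scores, twice as many subjects

# Mean square = SS / (n - 1), which is the sample variance
ms_small = sum_of_squares(scores) / (len(scores) - 1)
ms_big = sum_of_squares(bigger) / (len(bigger) - 1)

print(sum_of_squares(scores), sum_of_squares(bigger))  # SS grows with sample size
print(ms_small, ms_big)                                # MS stays comparable
```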

Meaning of ANOVA Assumption:

•Scores are independent- not correlated

The scores are not too closely related. It is hard to explain without going into too much detail. You will usually meet this assumption.

If you have two scores that are similar, it could be that they are correlated, but that hardly ever happens.

We don’t have to worry about this right now.

Three Parametric Statistics terms

- Difference in Groups

- Association/Strength of Relationship

- Prediction

What is the problem with running multiple t-tests?

If you run several t-tests, you increase the likelihood of getting a significant result by chance, so you can get a Type I error.

So ANOVA was created
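A rough illustration of how the risk grows. It assumes the tests are independent, which is a simplification, and the function name `familywise_error` is hypothetical.

```python
def familywise_error(alpha, n_tests):
    """Chance of at least one Type I error across n_tests independent tests."""
    return 1 - (1 - alpha) ** n_tests

# Three groups need three pairwise t-tests
fw_three = familywise_error(0.05, 3)
fw_ten = familywise_error(0.05, 10)

print(round(fw_three, 4), round(fw_ten, 4))
```

With three tests at alpha = .05, the chance of at least one false positive is already about 14%.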

•Kolmogorov-Smirnov test

A statistical test of normality

Range

•Range: Difference between highest and lowest values in a distribution

- •Limited utility when describing variance
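A small sketch of why the range has limited utility: two made-up sets of scores share the same range but differ in how spread out they are.

```python
from statistics import variance

tight = [1, 5, 5, 5, 9]    # hypothetical: most scores sit at the center
spread = [1, 2, 5, 8, 9]   # hypothetical: scores sit farther from the center

# Range = highest value - lowest value
range_tight = max(tight) - min(tight)
range_spread = max(spread) - min(spread)

# Same range, but the sample variance tells the two apart
print(range_tight, range_spread)
print(variance(tight), variance(spread))
```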

T-Test Assumptions

- •Data is measured at least at the interval level

- •Scores are independent- not correlated

- •Normality

- •Homogeneity of variance

- •Independent t-test