L14 - Dealing with Serial Correlation Flashcards

(13 cards)

How can the Durbin-Watson test give an approximate value for the autocorrelation coefficient?

- DW ≈ 2(1 - ρ(hat)), so ρ(hat) ≈ 1 - DW/2; if DW is near 0, e.g. 0.1, then ρ(hat) is close to 1 and we should suspect that positive autocorrelation exists
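
A minimal sketch of that approximation, using the standard relation DW ≈ 2(1 - ρ). The function names and the residual values are made up for illustration:

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic: squared first differences over squared residuals."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2
              for t in range(1, len(residuals)))
    den = sum(e ** 2 for e in residuals)
    return num / den


def rho_from_dw(dw):
    """Approximate first-order autocorrelation implied by DW ≈ 2(1 - rho)."""
    return 1.0 - dw / 2.0


# Hypothetical residuals that drift slowly and change sign only once.
resid = [1.0, 1.2, 0.9, 0.7, 0.4, -0.1, -0.5, -0.8, -1.0, -0.9]
dw = durbin_watson(resid)
print(round(dw, 3), round(rho_from_dw(dw), 3))
```

A DW near 2 maps to ρ(hat) near 0, a DW near 0 to ρ(hat) near 1, and a DW near 4 to ρ(hat) near -1.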

How does autocorrelation affect the Standard Error of β(hat)?

- the assumed distribution uses the usual OLS formula for the variance, so the assumed variance of β(hat) gives the red pdf

- however, the true distribution, which is subject to serial correlation, has the second variance, giving the blue pdf

- the regression coefficient isn't biased, as E(β(hat)) = β (the sampling distribution is centred on β)

- but there is a bias in the SE: when the autocorrelation coefficients are of the same sign, β(hat) has more variance than the usual formula implies –> the OLS SE underestimates the true variance
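
For reference, a sketch of the two variances in the textbook case. It assumes a single regressor x_t (measured in deviations from its mean) that follows an AR(1) process with coefficient φ, AR(1) errors with coefficient ρ, and a large sample. These assumptions and symbols are not stated on the card:

```latex
\operatorname{Var}(\hat\beta)_{\text{assumed}} = \frac{\sigma_u^2}{\sum_t x_t^2},
\qquad
\operatorname{Var}(\hat\beta)_{\text{true}} \approx
\frac{\sigma_u^2}{\sum_t x_t^2}\cdot\frac{1+\rho\phi}{1-\rho\phi}
```

When ρφ > 0 the ratio (1 + ρφ)/(1 - ρφ) exceeds 1, so the true pdf is more spread out than the assumed one; when ρφ < 0 it is below 1.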

What happens to the OLS pdfs when the autocorrelation coefficients have opposite signs?

- with opposite signs the true variance is smaller than the usual formula implies, so the OLS SE overestimates the true variance

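Why is bias in the SE a problem?

- it affects the reliability of hypothesis tests: t-statistics and confidence intervals are computed from the SE, so a biased SE makes them misleading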

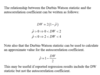

What is the difference between ρ(hat) and Φ(hat)?

ρ(hat) –> first-order autocorrelation coefficient for the equation residuals

Φ(hat) –> first-order autocorrelation coefficient for the X variable

- if you can find these values from the correlograms, you can substitute them into the autocorrelation variance equation to get a factor for how much larger/smaller the true variance is than the estimated variance, e.g. 8 would mean it is 8 times larger
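
A small sketch of that calculation, assuming the large-sample textbook factor (1 + ρΦ)/(1 - ρΦ) for a single regressor. The card does not reproduce the variance equation, so this form, the function name and the input values are assumptions:

```python
def variance_ratio(rho, phi):
    """Ratio of true to assumed variance of the OLS slope, (1 + rho*phi) / (1 - rho*phi)."""
    if abs(rho * phi) >= 1:
        raise ValueError("need |rho * phi| < 1")
    return (1 + rho * phi) / (1 - rho * phi)


# Illustrative values, as if read off the two correlograms.
print(variance_ratio(0.9, 0.9))   # same sign: ratio above 1
print(variance_ratio(0.9, -0.9))  # opposite signs: ratio below 1
print(variance_ratio(0.0, 0.9))   # no residual autocorrelation: ratio of 1
```

The square root of the ratio gives the corresponding factor for the standard error.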

How do we correct serial correlation?

- Non-Linear Least Squares (NLS) is used to correct for the presence of serial correlation

- used to simultaneously estimate β (slope coefficient) and ρ (autoregressive parameter) –> NLS is needed because the combined equation is non-linear in the parameters (it contains the product ρβ), so OLS cannot estimate them directly

- to get the NLS equation, lag the first equation one period (t-1) and substitute it into the second equation

- although we have 3 variables, we now only have two coefficients
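
The substitution can be written out as follows, assuming the two equations are a simple regression without an intercept and an AR(1) error process. The original equations are not reproduced on the card, so this form is an assumption:

```latex
\begin{aligned}
y_t &= \beta x_t + u_t, \qquad u_t = \rho u_{t-1} + \varepsilon_t \\
u_{t-1} &= y_{t-1} - \beta x_{t-1} \\
y_t &= \beta x_t + \rho\,(y_{t-1} - \beta x_{t-1}) + \varepsilon_t \\
    &= \rho\, y_{t-1} + \beta x_t - \rho\beta\, x_{t-1} + \varepsilon_t
\end{aligned}
```

The three right-hand-side variables are y_{t-1}, x_t and x_{t-1}, but the coefficient on x_{t-1} is the product -ρβ, so only β and ρ are free.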

What is the Non-Linear Least Squares Formula?

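- cannot just take derivatives and solve for the estimates as in OLS: the first-order conditions are non-linear in β and ρ, so there is no closed-form solution

- do not need to worry about the details of the Newton method –> the software package does the iterations, just need to remember the matrix form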
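
To make the idea concrete, here is a minimal sketch that minimises the NLS sum of squares for a model of the form y_t = ρ·y_{t-1} + β·x_t - ρβ·x_{t-1} + ε_t. It is not what a software package does: instead of Newton-type iterations it uses a plain grid search over ρ, solving for β in closed form at each grid point (for a fixed ρ the problem is linear in β). The model form, function name and data are assumptions for illustration:

```python
def nls_ar1(y, x, grid_steps=199):
    """Minimise sum_t (y_t - rho*y_{t-1} - beta*x_t + rho*beta*x_{t-1})^2.

    Returns (beta, rho, ssr) for the best point on an even grid of rho in (-1, 1).
    """
    n = grid_steps + 1
    best = None
    for i in range(1, n):
        rho = (2 * i - n) / n  # evenly spaced points strictly inside (-1, 1)
        ys = [y[t] - rho * y[t - 1] for t in range(1, len(y))]
        xs = [x[t] - rho * x[t - 1] for t in range(1, len(x))]
        sxx = sum(v * v for v in xs)
        if sxx == 0:
            continue
        # For this rho, the best beta is the OLS slope of ys on xs.
        beta = sum(a * b for a, b in zip(xs, ys)) / sxx
        ssr = sum((a - beta * b) ** 2 for a, b in zip(ys, xs))
        if best is None or ssr < best[2]:
            best = (beta, rho, ssr)
    return best


# Hypothetical noiseless data generated with beta = 2 and rho = 0.6.
x = [1, 3, 2, 5, 4, 7, 6, 9, 8, 11]
y = [2 * xt + 0.6 ** t for t, xt in enumerate(x)]
beta_hat, rho_hat, ssr = nls_ar1(y, x)
print(beta_hat, rho_hat, ssr)
```

A grid search is slow and only as precise as the grid, which is why packages use Newton-type updates; the objective being minimised is the same.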

On a graph, what might indicate there is autocorrelation?

When there is a trend in the residuals and they only change sign a few times –> when using OLS and the normal regression equation

- most likely positive autocorrelation

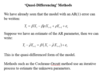
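
The "few sign changes" check can also be done numerically. A rough sketch follows; the function name and residual values are illustrative, and the benchmark assumes roughly independent residuals, which would switch sign about half the time:

```python
def count_sign_changes(residuals):
    """Number of times consecutive non-zero residuals switch sign."""
    signs = [1 if e > 0 else -1 for e in residuals if e != 0]
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


# Hypothetical residuals with a slow drift from positive to negative.
resid = [1.0, 1.2, 0.9, 0.7, 0.4, -0.1, -0.5, -0.8, -1.0, -0.9]
changes = count_sign_changes(resid)
print(changes, "sign changes out of", len(resid) - 1, "possible")
```

Far fewer changes than about half of the possible ones points towards positive autocorrelation; far more points towards negative autocorrelation.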

What is the Quasi-differencing method?

- like NLS, it is a mechanical way of correcting for autocorrelation; it can be used if we already know the autoregressive parameter ρ
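
As a sketch, assuming a simple regression y_t = βx_t + u_t with AR(1) errors u_t = ρu_{t-1} + ε_t (the starred variables are notation introduced here), quasi-differencing with a known ρ gives an equation whose error is the well-behaved ε_t, so OLS can be applied to the transformed data:

```latex
y_t - \rho y_{t-1} = \beta\,(x_t - \rho x_{t-1}) + \varepsilon_t
\quad\Longleftrightarrow\quad
y_t^{*} = \beta x_t^{*} + \varepsilon_t,
\qquad y_t^{*} = y_t - \rho y_{t-1},\;\; x_t^{*} = x_t - \rho x_{t-1}
```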

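How is the iterative method used in conjunction with the quasi-differencing method to find the AR coefficient?

- can get the estimate ρ(hat) from the Durbin-Watson test, quasi-difference the data with it, re-estimate β by OLS, then update ρ(hat) from the new residuals and repeat until the estimates stop changing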
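
A minimal sketch of such an iteration (often called the Cochrane-Orcutt procedure), assuming a regression through the origin with AR(1) errors. Note the swap: to keep the example exact, ρ is updated by regressing the residuals on their own lag rather than by the Durbin-Watson approximation 1 - DW/2. Function names and data are hypothetical:

```python
def ols_slope(y, x):
    """OLS slope for a regression through the origin."""
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)


def iterate_rho(y, x, rho=0.0, tol=1e-10, max_iter=200):
    """Alternate between beta (given rho) and rho (given beta) until rho settles."""
    for _ in range(max_iter):
        # Step 1: quasi-difference with the current rho, then estimate beta by OLS.
        ys = [y[t] - rho * y[t - 1] for t in range(1, len(y))]
        xs = [x[t] - rho * x[t - 1] for t in range(1, len(x))]
        beta = ols_slope(ys, xs)
        # Step 2: residuals of the original equation, regressed on their own lag.
        u = [yt - beta * xt for yt, xt in zip(y, x)]
        new_rho = ols_slope(u[1:], u[:-1])
        if abs(new_rho - rho) < tol:
            return beta, new_rho
        rho = new_rho
    return beta, rho


# Hypothetical noiseless data generated with beta = 2 and rho = 0.6.
x = [1, 3, 2, 5, 4, 7, 6, 9, 8, 11]
y = [2 * xt + 0.6 ** t for t, xt in enumerate(x)]
beta_hat, rho_hat = iterate_rho(y, x)
print(beta_hat, rho_hat)
```

Each step lowers the same sum of squares that NLS minimises, so the two approaches should typically end up close to each other.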

What is the Common Factor Restriction?

Some econometricians have argued against the use of mechanical corrections for autocorrelation on the grounds that they impose an untested restriction, known as the common factor restriction

How do you test for Common Factor Restriction?

- This is used to see whether or not you can use the NLS or quasi-differencing methods, i.e. whether the restriction they impose is consistent with the data
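
One common way to frame the test, sketched here for a single-regressor model with AR(1) errors. The γ coefficients, T (sample size) and the RSS labels are illustrative notation, not taken from the card:

```latex
\begin{aligned}
\text{Unrestricted:}\quad & y_t = \gamma_1 y_{t-1} + \gamma_2 x_t + \gamma_3 x_{t-1} + \varepsilon_t \\
\text{Restricted (AR(1) error):}\quad & y_t = \rho\, y_{t-1} + \beta x_t - \rho\beta\, x_{t-1} + \varepsilon_t \\
H_0:\quad & \gamma_3 = -\gamma_1 \gamma_2 \\
\text{LR statistic:}\quad & T \ln\!\left(\frac{RSS_R}{RSS_U}\right) \;\overset{a}{\sim}\; \chi^2(1)
\end{aligned}
```

The unrestricted model can be estimated by OLS and the restricted one by NLS. A large statistic rejects the restriction, which suggests the dynamics should be modelled directly rather than corrected mechanically.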

What happens when we omit a variable that is serially correlated?

- If we omit a variable from a regression equation that should be included, and that variable is itself serially correlated, the result will be a serially correlated error.

- In this case OLS will be biased, inefficient and the standard errors of the coefficients will be unreliable.

- There is little we can do in this case other than go back and respecify the model from the start.

- Note that if this is the cause of serial correlation then inference based on ‘corrections’ for serial correlation becomes unreliable