Chapter 5: Joint Probability Distributions Flashcards

Joint Probability Mass Function for Discrete Variables
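The card's own text was not preserved. The usual textbook form of the definition is sketched here, not quoted from the deck. For discrete X and Y, the joint pmf gives a probability to every pair of values:

$$
p(x, y) = P(X = x \text{ and } Y = y), \qquad p(x, y) \ge 0, \qquad \sum_x \sum_y p(x, y) = 1,
$$

$$
P\big((X, Y) \in A\big) = \sum_{(x, y) \in A} p(x, y).
$$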

Joint Probability Density Function for Continuous Variables
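For continuous X and Y the joint function is a density, not a mass function. Probabilities come from integrating it over a region A rather than from summing. The standard conditions are sketched below (usual textbook form, not the card's verbatim text):

$$
f(x, y) \ge 0, \qquad \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} f(x, y)\,dx\,dy = 1, \qquad P\big((X, Y) \in A\big) = \iint_A f(x, y)\,dx\,dy.
$$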

Example of Joint Probability Density

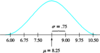

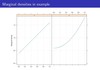

Marginal Densities in the Example

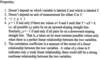
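The example's own densities survive only in the images above. As a separate illustration, each marginal density integrates the joint pdf over the other variable. The pdf used below, $f(x, y) = x + y$ on $0 \le x \le 1,\ 0 \le y \le 1$, is hypothetical and not taken from the deck:

$$
f_X(x) = \int_{-\infty}^{\infty} f(x, y)\,dy, \qquad f_Y(y) = \int_{-\infty}^{\infty} f(x, y)\,dx,
$$

$$
f_X(x) = \int_0^1 (x + y)\,dy = x + \tfrac{1}{2}, \quad 0 \le x \le 1, \qquad f_Y(y) = y + \tfrac{1}{2}, \quad 0 \le y \le 1 \ \text{(by symmetry)}.
$$

Each marginal integrates to 1, since $\int_0^1 (x + \tfrac{1}{2})\,dx = \tfrac{1}{2} + \tfrac{1}{2}$. Because $f_X(0)\,f_Y(0) = \tfrac{1}{4} \ne 0 = f(0, 0)$, this particular X and Y are not independent.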

Independent Random Variables

Independent Random Variables Explained

- The definition says that two variables are independent if their joint pmf or pdf is the product of the two marginal pmf’s or pdf’s.

- Intuitively, independence says that knowing the value of one of the variables does not provide additional information about what the value of the other variable might be.

- Independence of X and Y requires that every entry in the joint probability table be the product of the corresponding row and column marginal probabilities.

- Independence of two random variables is most useful when the description of the experiment under study suggests that X and Y have no effect on one another.

- Then, once the marginal pmf’s or pdf’s have been specified, the joint pmf or pdf is simply the product of the two marginal functions. It follows that $P(a \le X \le b,\ c \le Y \le d) = P(a \le X \le b) \cdot P(c \le Y \le d)$.
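The table condition for independence (every cell equals row marginal times column marginal) can be checked mechanically. This is a minimal Python sketch. It assumes the joint pmf is stored as a dict mapping `(x, y)` pairs to probabilities. The tables and the helper names `marginals` and `is_independent` are hypothetical illustrations, not taken from the deck. Exact `Fraction` arithmetic keeps the equality test free of floating-point rounding.

```python
from fractions import Fraction
from itertools import product


def marginals(joint):
    """Row and column totals of a joint pmf stored as {(x, y): p}."""
    p_x, p_y = {}, {}
    for (x, y), p in joint.items():
        p_x[x] = p_x.get(x, 0) + p
        p_y[y] = p_y.get(y, 0) + p
    return p_x, p_y


def is_independent(joint):
    """True if p(x, y) == p_X(x) * p_Y(y) for EVERY cell of the table."""
    p_x, p_y = marginals(joint)
    return all(
        joint.get((x, y), 0) == p_x[x] * p_y[y]
        for x, y in product(p_x, p_y)  # also covers cells absent from the dict (p = 0)
    )


# Hypothetical joint table (made-up numbers, not the deck's example).
dependent = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 8),
}

# A table that is independent by construction: the product of the same marginals.
p_x, p_y = marginals(dependent)
independent = {(x, y): p_x[x] * p_y[y] for x, y in product(p_x, p_y)}

print(is_independent(dependent))    # False: p(0, 0) = 1/8 but p_X(0) * p_Y(0) = 3/16
print(is_independent(independent))  # True
```

A single mismatched cell is enough to rule out independence, which is why the check runs over the full grid of value pairs rather than only the stored entries.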

Conditional Distributions
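For discrete variables, the conditional pmf of Y given X = x is $p_{Y|X}(y \mid x) = p(x, y)/p_X(x)$. It is defined only when $p_X(x) > 0$. The sketch below uses a made-up joint table and a hypothetical helper `conditional_y_given_x`. It divides one row of the table by that row's total.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x, y) (made-up numbers for illustration).
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 8),
}


def conditional_y_given_x(joint, x0):
    """p_{Y|X}(y | x0) = p(x0, y) / p_X(x0); requires p_X(x0) > 0."""
    p_x0 = sum(p for (x, _), p in joint.items() if x == x0)  # marginal p_X(x0)
    if p_x0 == 0:
        raise ValueError("conditional pmf is undefined when p_X(x0) = 0")
    return {y: p / p_x0 for (x, y), p in joint.items() if x == x0}


cond = conditional_y_given_x(joint, 0)
print(cond)                # {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
print(sum(cond.values()))  # 1  (a conditional pmf is itself a pmf)
```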

Expected Value
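For a function h(X, Y) of two discrete variables, $E[h(X, Y)] = \sum_x \sum_y h(x, y)\,p(x, y)$. In the continuous case, the sum becomes a double integral against f(x, y). The Python sketch below assumes a made-up joint table, and `expect` is a hypothetical helper name. The last line also illustrates linearity, $E[X + Y] = E[X] + E[Y]$.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x, y) (made-up numbers for illustration).
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 8),
}


def expect(h, joint):
    """E[h(X, Y)] = sum over all (x, y) of h(x, y) * p(x, y)."""
    return sum(h(x, y) * p for (x, y), p in joint.items())


print(expect(lambda x, y: x, joint))      # 1/2   -> E[X]
print(expect(lambda x, y: y, joint))      # 7/8   -> E[Y]
print(expect(lambda x, y: x * y, joint))  # 3/8   -> E[XY]
print(expect(lambda x, y: x + y, joint))  # 11/8  -> E[X + Y] = 1/2 + 7/8
```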

Covariance

- The covariance of X and Y measures the degree to which the two variables tend to “move together”:

- a positive covariance indicates that the deviations of X and Y from their respective means tend to have the same sign;

- a negative covariance indicates that the deviations of X and Y from their respective means tend to have opposite signs.
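Covariance is defined as $\operatorname{Cov}(X, Y) = E[(X - \mu_X)(Y - \mu_Y)]$, which reduces to the shortcut $E[XY] - \mu_X \mu_Y$. The sketch below computes both forms on a hypothetical joint table (made-up numbers) and confirms that they agree. The result is negative because in this table X = 1 is paired most often with Y = 0, so deviations from the means tend to have opposite signs.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x, y) (made-up numbers for illustration).
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 8),
}


def expect(h, joint):
    """E[h(X, Y)] for a joint pmf stored as {(x, y): p}."""
    return sum(h(x, y) * p for (x, y), p in joint.items())


def covariance(joint):
    """Cov(X, Y) = E[(X - mu_X)(Y - mu_Y)], computed from the definition."""
    mu_x = expect(lambda x, y: x, joint)
    mu_y = expect(lambda x, y: y, joint)
    return expect(lambda x, y: (x - mu_x) * (y - mu_y), joint)


cov = covariance(joint)

# Shortcut formula Cov(X, Y) = E[XY] - mu_X * mu_Y must agree exactly.
e_xy = expect(lambda x, y: x * y, joint)
mu_x = expect(lambda x, y: x, joint)
mu_y = expect(lambda x, y: y, joint)
shortcut = e_xy - mu_x * mu_y

print(cov, shortcut)  # -1/16 -1/16
```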

Correlation

Correlation Coefficient

Properties of the Correlation Coefficient
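The correlation coefficient is $\rho_{X,Y} = \operatorname{Cov}(X, Y)/(\sigma_X \sigma_Y)$. It has the following standard properties:

- $-1 \le \rho \le 1$.
- $\rho = \pm 1$ exactly when $Y = aX + b$ with probability 1 for some $a \ne 0$.
- Independence implies $\rho = 0$, although $\rho = 0$ does not imply independence.

The Python sketch below uses hypothetical tables (made-up numbers). It computes ρ for one table and then shows a dependent pair whose correlation is 0. The helper names are illustrative.

```python
import math
from fractions import Fraction


def expect(h, joint):
    """E[h(X, Y)] for a joint pmf stored as {(x, y): p}."""
    return sum(h(x, y) * p for (x, y), p in joint.items())


def correlation(joint):
    """rho = Cov(X, Y) / (sigma_X * sigma_Y); undefined if either sigma is 0."""
    mu_x = expect(lambda x, y: x, joint)
    mu_y = expect(lambda x, y: y, joint)
    cov = expect(lambda x, y: (x - mu_x) * (y - mu_y), joint)
    var_x = expect(lambda x, y: (x - mu_x) ** 2, joint)
    var_y = expect(lambda x, y: (y - mu_y) ** 2, joint)
    return float(cov) / math.sqrt(var_x * var_y)


# Hypothetical joint table (made-up numbers).
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 8),
}
rho = correlation(joint)
print(round(rho, 4))  # -0.1601, i.e. -1/sqrt(39); lies within [-1, 1]

# Uncorrelated is not the same as independent: X uniform on {-1, 0, 1}, Y = X**2.
third = Fraction(1, 3)
square = {(-1, 1): third, (0, 0): third, (1, 1): third}
print(correlation(square))  # 0.0, even though Y is completely determined by X
```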

Joint PMF of Two Discrete Random Variables

Marginal PMF of Two Discrete RVs
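The marginal pmfs are the row and column totals of the joint table: $p_X(x) = \sum_y p(x, y)$ and $p_Y(y) = \sum_x p(x, y)$. The deck's own example table was not preserved, so this minimal Python sketch assumes a hypothetical one. It also checks the two conditions that any joint pmf must satisfy.

```python
from collections import defaultdict
from fractions import Fraction

# Hypothetical joint pmf p(x, y) for X in {0, 1} and Y in {0, 1, 2};
# the numbers are made up for illustration.
joint = {
    (0, 0): Fraction(1, 8), (0, 1): Fraction(1, 4), (0, 2): Fraction(1, 8),
    (1, 0): Fraction(1, 4), (1, 1): Fraction(1, 8), (1, 2): Fraction(1, 8),
}


def marginals(joint):
    """Return (p_X, p_Y): sum p(x, y) over y for p_X and over x for p_Y."""
    p_x = defaultdict(Fraction)  # Fraction() == 0
    p_y = defaultdict(Fraction)
    for (x, y), p in joint.items():
        p_x[x] += p  # row total
        p_y[y] += p  # column total
    return dict(p_x), dict(p_y)


# A valid joint pmf is nonnegative and sums to 1.
assert all(p >= 0 for p in joint.values()) and sum(joint.values()) == 1

p_x, p_y = marginals(joint)
print(p_x)  # {0: Fraction(1, 2), 1: Fraction(1, 2)}
print(p_y)  # {0: Fraction(3, 8), 1: Fraction(3, 8), 2: Fraction(1, 4)}
```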

Example: Joint Probability Distribution of 2 Discrete RVs

Example: Joint Probability Distribution of 2 Discrete RVs (contd.)

Finding Marginal Distributions from Previous Example

Two Continuous Random Variables

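If A is the two-dimensional rectangle $\{(x, y): a \le x \le b,\ c \le y \le d\}$, then $P\big((X, Y) \in A\big) = \int_a^b \int_c^d f(x, y)\,dy\,dx$.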

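For a continuous pair, a rectangle probability is a double integral of the joint pdf. When no closed form is handy, the integral can be approximated numerically. The sketch below uses the 2-D midpoint rule, a numerical technique swapped in for illustration rather than anything the deck prescribes. It is applied to a hypothetical pdf $f(x, y) = x + y$ on the unit square. The function name `rectangle_probability` and the grid size `n` are illustrative assumptions. Because the midpoint rule is exact for this linear integrand, the results match the exact values 1 and 1/8 up to floating-point rounding.

```python
def rectangle_probability(f, a, b, c, d, n=200):
    """Approximate P(a <= X <= b, c <= Y <= d) = int_a^b int_c^d f(x, y) dy dx
    with the 2-D midpoint rule on an n-by-n grid of cells."""
    hx = (b - a) / n
    hy = (d - c) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * hx  # midpoint of the i-th x cell
        for j in range(n):
            y = c + (j + 0.5) * hy  # midpoint of the j-th y cell
            total += f(x, y)
    return total * hx * hy


def f(x, y):
    """Hypothetical joint pdf: x + y on the unit square, 0 elsewhere."""
    return x + y if 0 <= x <= 1 and 0 <= y <= 1 else 0.0


print(round(rectangle_probability(f, 0, 1, 0, 1), 6))      # 1.0 (total probability)
print(round(rectangle_probability(f, 0, 0.5, 0, 0.5), 6))  # 0.125 (exact value is 1/8)
```

For a curved integrand the midpoint rule is only approximate, and increasing `n` trades run time for accuracy.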

Example 3

Example 3 contd.