Stats 6 - Non-Linear Models Flashcards

(31 cards)

What is characteristic of Linear models?

- The model is linear in all of its co-efficients/parameters (β0, β1, β2) –> simple to fit

- The data can be fitted with the Ordinary Least Squares (OLS) solution –> minimizing the sum of the squared residuals (RSS)

Example shown below

- Note that even the last example with e^β0 is linear, as e^β0 is just a constant term
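As a minimal sketch of the card above (the data are simulated here and are an assumption, not part of the original deck), a model that is curved in x can still be linear in its parameters, so OLS gives the exact solution in one step:

```r
# Simulated data (assumption for illustration only)
set.seed(42)
x <- seq(1, 10, length.out = 50)
y <- 2 + 0.5 * x + 0.3 * x^2 + rnorm(50, sd = 1)

# Curved in x, but linear in the parameters b0, b1, b2
quad_fit <- lm(y ~ x + I(x^2))

# The OLS solution has a closed form: solve (X'X) b = X'y
X <- model.matrix(quad_fit)
beta_hat <- solve(crossprod(X), crossprod(X, y))

# OLS minimizes the residual sum of squares (RSS)
rss <- sum(residuals(quad_fit)^2)

print(coef(quad_fit))
print(as.vector(beta_hat))
print(rss)
```

Both sets of printed coefficients should agree up to numerical precision, because `lm()` solves the same least squares problem.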

How can we characterise Non-Linear Models?

A non-Linear model is not linear in the parameters

Examples of Non-Linear models

In all of these examples, the model is non-linear in at least one parameter (e.g. β2 appears as an exponent in xi^β2)
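For illustration, here is one generic pair of equations (not necessarily the ones shown on the original card). A quick check is to differentiate the model with respect to each parameter: if any derivative still contains a parameter, the model is non-linear in the parameters.

```latex
% Linear in the parameters: no derivative depends on any beta
y_i = \beta_0 + \beta_1 x_i + \beta_2 x_i^2 + \varepsilon_i,
\qquad \frac{\partial y_i}{\partial \beta_2} = x_i^2

% Non-linear in the parameters: the derivative still contains beta_1 and beta_2
y_i = \beta_0 + \beta_1 x_i^{\beta_2} + \varepsilon_i,
\qquad \frac{\partial y_i}{\partial \beta_2} = \beta_1 x_i^{\beta_2} \ln x_i
```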

When trying to fit a Linear model to our data, how do we decide what’s best?

Least Squares Solution!

Can we apply the Least Squares solution to a Non-Linear Model?

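No! –> The exact (closed-form) OLS solution does not work, because the model is not linear in its parameters. The RSS has to be minimized numerically instead (Non-Linear Least Squares, NLLS)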

Why do we care about Non-Linear models in the first place?

Many observations in biology are not well-fitted by linear models –> the underlying biological phenomenon is not well described by a linear equation

Examples:

- Michaelis-Menten Biochemical Kinetics

- Allometric growth (growth of two body parts in proportion to each other)

- Response of metabolic rates to changing temperature

- Predator-prey functional response

- Population growth

- Time-series data (sinusoidal patterns)

Non-Linear model Example – Temperature and Metabolism

Enzyme responsible for Bioluminescence is very temperature dependent –> captured by modified Arrhenius equation

So how do we fit data with a Non-Linear Model?

We can use a computer to find the approximate but close-to-optimal least squares solution!

- Choose starting values –> guess some initial values for the parameters

- Then adjust the parameters iteratively using an algorithm –> searching for decreases in RSS

- Eventually, end up with a combination of β where the RSS is approximately minimized.

Note –> It is better if your guess of the initial parameters is close to the global minimum

Outline the general procedure of fitting Non-Linear Models to data

General Procedure

1. Start with an initial value for each parameter

2. Generate a curve defined by the initial values

3. Calculate the RSS

4. Adjust the parameters to make the curve fit closer to the data (minimize the sum of squared residuals) - Tricky part

5. Adjust the parameters again…

6. Iterative process –> repeat steps 4+5

7. Stop the search when the adjustments make virtually no difference to RSS
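The procedure above can be sketched by hand in R. This is an illustration under stated assumptions, not what `nls()` does internally: the data are simulated, the model is Michaelis-Menten, and the "adjust" step uses a plain Gauss-Newton update with no safeguards.

```r
# Simulated Michaelis-Menten data (assumption: true V_max = 10, K_M = 5)
set.seed(1)
S <- rep(c(0.5, 1, 2, 4, 8, 16, 32, 64), each = 3)
V <- 10 * S / (5 + S) + rnorm(length(S), sd = 0.3)

predict_mm <- function(par) par[1] * S / (par[2] + S)   # curve for given parameters
rss <- function(par) sum((V - predict_mm(par))^2)       # residual sum of squares

par <- c(12, 7)          # start with an initial value for each parameter
rss_path <- rss(par)     # generate the curve and calculate its RSS

for (iter in 1:50) {
  res <- V - predict_mm(par)
  # Derivatives of the curve with respect to V_max and K_M
  J <- cbind(S / (par[2] + S), -par[1] * S / (par[2] + S)^2)
  # Adjust the parameters (Gauss-Newton step)
  step <- solve(crossprod(J), crossprod(J, res))
  par <- par + as.vector(step)
  rss_path <- c(rss_path, rss(par))
  n <- length(rss_path)
  # Stop when the adjustment makes virtually no difference to the RSS
  if (abs(rss_path[n - 1] - rss_path[n]) < 1e-10 * rss_path[n - 1]) break
}

print(par)       # final parameter values
print(rss_path)  # RSS after each adjustment
```

Printing `rss_path` shows how quickly the RSS settles; a poor starting guess would typically need more iterations or fail outright.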

What are the two main types of Optimizing Algorithms used when adjusting parameters to minimize RSS?

- Gauss-Newton algorithm –> often used, but it doesn’t work well if the model to be fitted is mathematically complicated (the parameter search landscape is difficult), and it struggles if the starting values you provide are far from optimal

- Levenberg-Marquardt –> algorithm that switches between Gauss-Newton and “gradient descent” (Helps decide which direction to take in a complicated landscape) –> more robust against starting values that are far from optimal and is more reliable in most scenarios.
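In textbook form (a sketch only; actual implementations add refinements such as step-size control and scaling), the two update rules differ by a single damping term λ. Here r is the vector of residuals and J is the matrix of derivatives of the fitted curve with respect to each parameter; these symbols are introduced for illustration.

```latex
% Residuals and Jacobian of the model f(x_i, \beta)
r_i = y_i - f(x_i, \beta), \qquad J_{ij} = \frac{\partial f(x_i, \beta)}{\partial \beta_j}

% Gauss-Newton update
\beta \leftarrow \beta + (J^\top J)^{-1} J^\top r

% Levenberg-Marquardt update with damping \lambda \ge 0
\beta \leftarrow \beta + (J^\top J + \lambda I)^{-1} J^\top r
```

With λ close to 0 the step is a Gauss-Newton step; with large λ it becomes a short step of roughly (1/λ) Jᵀr, which points down the RSS gradient, i.e. gradient descent.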

What should you do when your Non-linear model has been fitted?

Once NLLS fitting is done, you need to get the goodness of fit measures –> Is the model representative?

- First, we assess the fit visually

- Report the goodness of fit results:

a) Residual Sum of Squares (RSS)

b) Estimated co-efficients

c) For each co-efficient, we can present the confidence interval (the range within which we are confident the co-efficient lies), the t-statistic and the corresponding (two-tailed) p-value

- You may also want to compare and select between multiple competing models

Note –> Unlike Linear models, R2 should NOT be used to interpret the quality of an NLLS fit.
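A hedged sketch of gathering these measures in R, using simulated data (an assumption). The comparison against a straight-line model via `AIC()` is one common way to choose between competing models; the cards themselves do not name a specific criterion.

```r
# Simulated Michaelis-Menten data (assumption: true V_max = 10, K_M = 5)
set.seed(1)
S_data <- rep(c(0.5, 1, 2, 4, 8, 16, 32, 64), each = 3)
V_data <- 10 * S_data / (5 + S_data) + rnorm(length(S_data), sd = 0.3)

MM_model  <- nls(V_data ~ V_max * S_data / (K_M + S_data),
                 start = list(V_max = 12, K_M = 7))
lin_model <- lm(V_data ~ S_data)   # competing straight-line model

# Residual sum of squares for each model
rss_mm  <- sum(residuals(MM_model)^2)
rss_lin <- sum(residuals(lin_model)^2)
print(c(MM = rss_mm, linear = rss_lin))

# Estimated co-efficients with standard errors, t-statistics and p-values
print(summary(MM_model)$coefficients)

# One possible model-selection criterion (lower is better)
print(AIC(MM_model, lin_model))
```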

What are the NLLS assumptions?

NLLS has all the same assumptions as Ordinary Least Squares regression.

- No/minimal measurement error in the explanatory variable

- Data have constant variance –> errors in the y-axis are homogeneously distributed over the x-axis range

- The measurement/observation errors are normally distributed (Gaussian)

- Observations are independent of each other
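These assumptions are usually checked on the residuals after fitting. A minimal sketch with simulated data (an assumption); the Shapiro-Wilk test is only one of several possible normality checks:

```r
# Simulated Michaelis-Menten data (assumption: true V_max = 10, K_M = 5)
set.seed(1)
S_data <- rep(c(0.5, 1, 2, 4, 8, 16, 32, 64), each = 3)
V_data <- 10 * S_data / (5 + S_data) + rnorm(length(S_data), sd = 0.3)

MM_model <- nls(V_data ~ V_max * S_data / (K_M + S_data),
                start = list(V_max = 12, K_M = 7))

res      <- as.numeric(residuals(MM_model))
fit_vals <- as.numeric(fitted(MM_model))

# Constant variance: the spread of residuals should not change along the x-axis
plot(fit_vals, res, xlab = "Fitted values", ylab = "Residuals")
abline(h = 0, lty = 2)

# Normality: points should lie close to the line
qqnorm(res)
qqline(res)

# Formal normality test (a small p-value suggests non-normal errors)
normality <- shapiro.test(res)
print(normality)
```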

What happens if the errors in our Non-Linear model are not normally distributed?

We have to interpret the results cautiously, or use maximum likelihood or Bayesian fitting methods instead

What algorithm is normally used in R?

When using the nls() function –> Gauss-Newton algorithm is used

But for the Levenberg-Marquardt (LM) algorithm –> nlsLM() –> we require the installation of a package - minpack.lm

It offers additional features like the ability to “bound” parameters to realistic values
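A hedged sketch of bounding parameters with `nlsLM()`. The data are simulated and the bounds (0 to 100) are arbitrary assumptions; the block only runs the fit if the minpack.lm package is installed.

```r
# Simulated Michaelis-Menten data (assumption: true V_max = 10, K_M = 5)
set.seed(1)
S_data <- rep(c(0.5, 1, 2, 4, 8, 16, 32, 64), each = 3)
V_data <- 10 * S_data / (5 + S_data) + rnorm(length(S_data), sd = 0.3)

LM_model <- NULL
if (requireNamespace("minpack.lm", quietly = TRUE)) {
  LM_model <- minpack.lm::nlsLM(
    V_data ~ V_max * S_data / (K_M + S_data),
    start = list(V_max = 1, K_M = 1),
    lower = c(0, 0),       # bounds are given in the same order as 'start'
    upper = c(100, 100)    # arbitrary upper limits for illustration
  )
  print(coef(LM_model))
} else {
  message("Install minpack.lm first: install.packages('minpack.lm')")
}
```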

Outline the Coefficients in the Michaelis Menten equation.

Co-efficients

- Vmax –> Maximum rate of reaction –> occurs at saturating substrate concentration

- Km –> Substrate concentration at which the rate is Vmax/2 –> indication of affinity –> High = Low affinity/Low = High affinity

Km will dictate the overall shape of the curve –> does it approach Vmax quickly or slowly?

We have to remember that Vmax and Km have to be greater than zero –> important when picking starting values
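For reference, the standard Michaelis-Menten equation, where v is the reaction rate and [S] the substrate concentration. Setting [S] = K_m shows why K_m is the concentration at half the maximum rate:

```latex
v = \frac{V_{max}\,[S]}{K_m + [S]}
\qquad\Longrightarrow\qquad
v\,\big|_{[S] = K_m} = \frac{V_{max}\,K_m}{K_m + K_m} = \frac{V_{max}}{2}
```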

How to set up a Michaelis Menten model on R?

MM_model <- nls(V_data ~ V_max * S_data / (K_M + S_data))

V_data –> Rate of reaction

S_data –> Substrate concentration

When trying to fit a Non-Linear model on R, what will R do if you don’t input starting parameters/Coefficients?

For nls models you need to provide starting values for the parameters

If none are given then nls() will issue a warning, set all parameters to ‘1’ and work from there –> For simple models, despite the warning, this works well enough.
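A minimal sketch of what this looks like in practice. The data are simulated (an assumption), and the handlers are only there to display the warning text and to avoid stopping the script if the default starting values happen not to converge.

```r
# Simulated Michaelis-Menten data (assumption: true parameters 10 and 5)
set.seed(1)
S_data <- rep(c(0.5, 1, 2, 4, 8, 16, 32, 64), each = 3)
V_data <- 10 * S_data / (5 + S_data) + rnorm(length(S_data), sd = 0.3)

# No 'start' argument: nls() treats V_max and K_M as parameters because
# no objects with those names exist, and initializes both to 1
MM_model <- tryCatch(
  withCallingHandlers(
    nls(V_data ~ V_max * S_data / (K_M + S_data)),
    warning = function(w) {
      message("nls() warned: ", conditionMessage(w))
      invokeRestart("muffleWarning")
    }
  ),
  error = function(e) {
    message("nls() failed: ", conditionMessage(e))
    NULL
  }
)

if (!is.null(MM_model)) print(coef(MM_model))
```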

After fitting your Michaelis Menten Model what should you do?

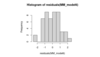

Hint - Look at image

- First Step is to visualize how well the model fits the data

Create Plot

plot(S_data, V_data, xlab = "Substrate Concentration", ylab = "Reaction Rate")

Input Trendline from Model

lines(S_data, predict(MM_model), lty = 1, col = "blue", lwd = 2)

- After plotting, gather some information using summary()

Estimates –> Estimated values for the Co-efficients (Vmax and Km)

Estimate/Std. Error = t-value, which has a given Pr(>|t|) –> t-test for the statistical significance of the obtained estimate

Number of iterations –> Number of times the NLS algorithm had to adjust the parameter values to find the minimal RSS solution.

Achieved Convergence tolerance –> tells you on what basis the algorithm decided it was close enough to the solution –> basically if the RSS does not improve past a certain point despite adjusting parameters the algorithm stops searching.
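A hedged sketch of pulling these pieces out of the summary, using simulated data (an assumption). The iteration count and tolerance are read from the `convInfo` element of the fitted object.

```r
# Simulated Michaelis-Menten data (assumption: true V_max = 10, K_M = 5)
set.seed(1)
S_data <- rep(c(0.5, 1, 2, 4, 8, 16, 32, 64), each = 3)
V_data <- 10 * S_data / (5 + S_data) + rnorm(length(S_data), sd = 0.3)

MM_model <- nls(V_data ~ V_max * S_data / (K_M + S_data),
                start = list(V_max = 12, K_M = 7))

MM_summary <- summary(MM_model)
print(MM_summary)

# Co-efficient table: Estimate, Std. Error, t value, Pr(>|t|)
coef_table <- MM_summary$coefficients

# The t-value is simply Estimate / Std. Error
t_by_hand <- coef_table[, "Estimate"] / coef_table[, "Std. Error"]
print(cbind(t_by_hand, t_reported = coef_table[, "t value"]))

# The two nls-specific rows of the summary
print(MM_model$convInfo$finIter)  # number of iterations to convergence
print(MM_model$convInfo$finTol)   # achieved convergence tolerance
```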

What are the main differences between lm and nls summary() output?

Generally, the same format except for…

The last two rows are specific to an NLS output

- Number of Iterations

- Achieved convergence tolerance

Why they are included?

NLLS is not an exact process; the solution is found by a numerical, iterative search on the computer.

Normally, the last two rows are not reported BUT they can be useful in solving problems if the fitting does not work

What is a quick way to obtain the co-efficient values from an nls model?

You can quickly obtain the Coefficient values from your nls model using the following code…

coef(MM_model)

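Can an ANOVA be performed on a Non-Linear model?

NO! –> Unlike with lm, calling anova() on a single nls model does not work. In R, anova() is only defined for comparing two or more nested nls models with each other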
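A hedged sketch of this behaviour in R, using simulated data (an assumption). Calling `anova()` on one nls fit gives an error, whereas `anova()` does accept a sequence of nested nls fits. The Hill model is introduced purely as an illustrative larger model: it reduces to Michaelis-Menten when its extra parameter `h` equals 1.

```r
# Simulated Michaelis-Menten data (assumption: true V_max = 10, K_M = 5)
set.seed(1)
S_data <- rep(c(0.5, 1, 2, 4, 8, 16, 32, 64), each = 3)
V_data <- 10 * S_data / (5 + S_data) + rnorm(length(S_data), sd = 0.3)

MM_model <- nls(V_data ~ V_max * S_data / (K_M + S_data),
                start = list(V_max = 12, K_M = 7))

# A single nls model: anova() stops with an error, captured here as text
single_result <- tryCatch(anova(MM_model),
                          error = function(e) conditionMessage(e))
print(single_result)

# Larger nested model (illustrative), started from the MM estimates with h = 1
hill_start <- c(as.list(coef(MM_model)), h = 1)
hill_model <- nls(V_data ~ V_max * S_data^h / (K_M^h + S_data^h),
                  start = hill_start)

# F-test comparing the two nested nls models
comparison <- anova(MM_model, hill_model)
print(comparison)
```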

How can you obtain confidence intervals for the Co-efficient estimates of an nls model? What can they be used for?

One very useful thing you can do after NLLS fitting is to calculate/construct confidence intervals (CI’s) around the estimated parameters/coefficients

Use the following Code - confint(MM_model)

It can be used for…

- The CI’s can be used to test whether the coefficient estimate is significantly different from a reference value

- They are also a quick way to test whether the same model fitted to a sample from another population gives statistically different coefficient estimates

In either case…

If the ranges overlap –> They are not statistically different (as a rough guide)

If the ranges do NOT overlap –> They are statistically different

Image –> Shows us that we are 95% certain that our co-efficient is located between these numbers
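A minimal sketch of using the intervals, with simulated data and a made-up reference value (both assumptions):

```r
# Simulated Michaelis-Menten data (assumption: true V_max = 10, K_M = 5)
set.seed(1)
S_data <- rep(c(0.5, 1, 2, 4, 8, 16, 32, 64), each = 3)
V_data <- 10 * S_data / (5 + S_data) + rnorm(length(S_data), sd = 0.3)

MM_model <- nls(V_data ~ V_max * S_data / (K_M + S_data),
                start = list(V_max = 12, K_M = 7))

# 95% confidence intervals: one row per co-efficient, lower and upper limits
ci <- suppressMessages(confint(MM_model))
print(ci)

# Hypothetical reference value for K_M, e.g. taken from the literature
reference_Km <- 8
differs <- reference_Km < ci["K_M", 1] || reference_Km > ci["K_M", 2]
print(differs)  # TRUE if the reference value lies outside the interval
```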

Are R2 values obtained from a Non-Linear model reliable?

R2 values obtained from a Non-Linear model ARE NOT reliable, and thus should not be used

They don’t always accurately reflect the quality of the fit and can definitely not be used to select between competing models

How can we tell R to start with specific coefficients for a non-linear model?

MM_model2 <- nls(V_data ~ V_max * S_data / (K_M + S_data), start = list(V_max = 12, K_M = 7))

Example –> Include start = list (… , …)

Note –> When selecting starting values, make sure they are sensible and make biological sense

Does using different starting values impact the final co-efficient?

YES!

Example below for Michaelis Menten Non-Linear model

- Co-efficients both set to one

- Co-efficients - V_max = 12 and K_M = 7

- Co-efficients - V_max = 0.01 and K_M = 10

A look at the different outputs!
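The three sets of starting values can be compared side by side. This is a sketch on simulated data (an assumption), so the numbers will differ from the original outputs; any fit that fails is reported as its error message instead of stopping the script.

```r
# Simulated Michaelis-Menten data (assumption: true V_max = 10, K_M = 5)
set.seed(1)
S_data <- rep(c(0.5, 1, 2, 4, 8, 16, 32, 64), each = 3)
V_data <- 10 * S_data / (5 + S_data) + rnorm(length(S_data), sd = 0.3)

# The three sets of starting values from the card
starts <- list(
  ones     = list(V_max = 1,    K_M = 1),
  sensible = list(V_max = 12,   K_M = 7),
  poor     = list(V_max = 0.01, K_M = 10)
)

# Fit once per starting set; return the estimates or the error message
fits <- lapply(starts, function(s) {
  tryCatch(
    coef(nls(V_data ~ V_max * S_data / (K_M + S_data), start = s)),
    error = function(e) conditionMessage(e)
  )
})

print(fits)
```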

What happens when you use starting values that are too far from their actual value?

If you provide values that are VERY far from the optimal you will receive an error message –> e.g. Singular gradient matrix at initial parameter estimates error
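A hedged sketch of triggering and catching such an error. The data are simulated, and the starting values are chosen deliberately (an assumption for illustration): with V_max = 0 the curve does not respond to K_M at all, so the algorithm has no direction to move in.

```r
# Simulated Michaelis-Menten data (assumption: true V_max = 10, K_M = 5)
set.seed(1)
S_data <- rep(c(0.5, 1, 2, 4, 8, 16, 32, 64), each = 3)
V_data <- 10 * S_data / (5 + S_data) + rnorm(length(S_data), sd = 0.3)

# Deliberately unhelpful starting values
bad_fit <- tryCatch(
  nls(V_data ~ V_max * S_data / (K_M + S_data),
      start = list(V_max = 0, K_M = 0.1)),
  error = function(e) e
)

if (inherits(bad_fit, "error")) {
  message("Fitting failed: ", conditionMessage(bad_fit))
} else {
  print(coef(bad_fit))
}
```

Wrapping the call in `tryCatch()` is useful when fitting many datasets in a loop, since one failed fit then does not stop the rest.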

Takeaway message –> NLLS model fitting is NOT an exact procedure.

But provided that your starting values are reasonable, NLLS is exact enough