Questions from tests Flashcards

(62 cards)

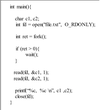

SWhat is the lesson from this question?

we must set the interest field to false before we allow the access of other threads in the CS.

- What is the lesson from this question?*

- (solving starvation)*

- Recall: this case does not involve a simple order between threads. It demonstrates the producer-costumer problem. Once a mass of readers read, the writer must find itself starved to death.*

- How is it solved?*

Another mutex!! we wrap the mutex which is used in order to get into the CS in another mutex. That is, all of the “Readers” are now waiting on the additional mutex, and not on the main mutex.

One-way + barrier of 5 threads.

That is, the barrier maintains the demand that exactly 5 vehicles pass the tunnel and additional 5 might enter only after all of them has left the tunnel.

It is done by adding a new mutex which is responsible of having the first 4 vehicles wait for the fifth to come. Once the fifth comes, it releases them.

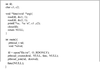

NOTE! DOWN(busy) comes after UP(mutex). That is super important becuase the release of busy is placed after the acquisition of mutex.

In order words, if i sleep on busy while holding mutex, i create a deadlock =[

How can we implement FIFO waking order?

The attached algorithm with the addition of wrapping mutex around flag = 0; sleep;

- How many bytes a second-level page table can point to?*

- How many pages are required for a 12MB process?*

- what are the key point in this question?*

There are 2^10 page directory entries, each of them refers to a page table with 2^10 page table entries.

Each page table entry referes to a base address of a physical page-frame, which can hold 2^12 addresses.

In total, 2^10*2^10^2^12 = 4*2^30 = 4GB of bytes.

Each pde refers to 2^10*2^12 =4*2^20 = 4MB.

ket point: when we think of the number of pages need to be allocated to memory for some program, we should first ask ourselves how many first-level pages we need. According to that, we shall add the number of second-level pages that will satisfy such an amount. Them combined should be the answer. For a 12MB process, we need 3 first-level pages. to provide these for also need 1 second-level page. In total - we need 4 pages.

Find the given address: 0x00403004

- 0x00403004 = 4 + 3*16^3+ 4*16^5 = 4,206,596*

- We know that starting from p1[0], we have 2^22 bytes. That is, [0,4,194,303]*

p1[1] = [4,194,304,8,388,607] (address is here)

- 4,206,596* - 4,194,304 = 12292

- Each entry in p2 has 2^12 bytes.*

- p2[0] - [0,4095]*

- p2[1] - [4096, 8191]*

- p2[2] - [8192, 12287]*

p3[3] - [12,288, 16383] (address is here)

12292 - 12288 = 4.

p1 = 1, p2 =3 ,p3 = 4

- In a two-level page table, how many accesses to memory are required to reach a paged memory reference if:*

- page is on memory*

- There is TLB , page on memory but is not on TLB*

- 3.There is TLB and page is found there.*

1. Answer is: 3 accesses

- Get page directory

- get page table

- get page + offset

- Answer is: 3 accesses

- Answer is: 1 access

A computer has an address space of 32 bits, and page size of 2KB, first level:

- What is its virtual memory size?

- What is the size of the page table?

- What is the maximal size of a program’s memory? does it depend on the size of the pages?

- 2^32.*

- We can divide the memory into 2^32/2^11 = 2^21 pages.*

- The page table is constructed of PTEs. There are 2^21 of such, each of size 4 bytes. Therefore, the overall size of the page table is 2^21*2^2=2^23 = 8MB.*

- The maximal size is the size of the virtual address, AKA 4GB. it has nothing to do with the page size.*

Assume an address space of 32 bits, 2KB size pages and a two-level page table, in which 8 bits are dedicated for the first level table.

What is the size of the 2nd table?

Virtual address - 2^32

- 8 bits for first level(2^8 PDEs)

- 11 bits for address(2^11 addresses in a page)

- 13 bits for second level(2^13 PTEs)

*2^13* 4 = 2^15 =32KB*

- What is the size of a single level page table on a 32 bit machine, with 4KB pages?*

- What is the size of a 64 bit machine’s page table with 4KB pages?*

- How many layers will we need if we want to ensure that each page table entry will require only a single page?*

- 2^32 addresses which are spread accross pages of size 4KB.*

- 2^32/2^12 = 2^20 PTEs.*

*That is, 2^20*4 = 2^22 = 4MB*

- 2^64 address which are spread accross pages of size 4KB.*

- 2^64/2^12=2^52*

That is, 2^52*8 = 2^55. 32 Peta Bytes

How many pages are there in one page table?

2^12/2^3 = 2^9.

- In order to “spread” 2^52 address to a specific PTE we need 52/9 layers, which is 6 layers.*

- In other words, we need to have 6 accesses to memory to retrieve each virtual address.*

- How many time will the disk be accessed when a user executes the following command:*

- more /usr/tmp/a.txt*

- Assume that:*

- 1.The size of ‘a.txt’ is 1 block.*

- 2.The i-node of the root directory is not in the memory.*

- 3.Entries ‘usr’, ‘tmp’ and ‘a.txt’ are all located in the first block of their directories.*

- 4.Ignore the disk access required in order to load more*

- So, how does it work?*

- Each struct file holds an inode, which holds a copy of dinode. since the root directory is not on memory, we need to find dinode of the root(first access), there, we are told to go to the first block(second access), to reach user, same process again to reach temp and the same to reach a.txt.*

- We reach a.txt after 6 accesses.*

- The more command displays the file content, since it is of size 1 block, we reach the inode, and then reach the block(2 accesses)*

- overall: 8 accesses.*

The Ofer2000 Operating Systems, based on UNIX, provides the following system call:

rename(char *old, char *new)

This call changes a file’s name from ‘old’ to ‘new’. What is the difference between using this call, and just copying ‘old’ to a new file, ‘new’, followed by deleting ‘old’? Answer in terms of disk access and allocation.

In terms of allocation:

- we may have a situation where we don’t have enough space for a new allocation using copy+delete.

- changing the location involves updating the inodes table in the kernel.

in terms of disk accesses:

- changing a name does not involve any disk access. It is on the user-level.

- copying old to new requires reaching the source inode, then accessing each one of its blocks and copying them to the blocks of another inode. That is, a lot of accesses.

What would be the maximal size of a file in a UNIX system with an address size of 32 bits if :

- The block size is 1K

- The block size is 4K

- The i-node has 10 direct block entries,*

- single, double & triple indirect*

- virtual address: 2^32*

- block size is 1K:*

- 10 direct blocks - 10K

- single indirect - 256K - 2^8K

- double indirect - 256^2 - 2^16K

- triple - 256^3 - 2^32K

- total:

block size is 4K:

- 10 direct blocks - 40K

- single indirect - 1024K - 2^10K*4

- double indirect - 1024^2 - 2^20K*4

- triple - 1024^3 - 2^30K*4

- Recall two fundamental points about fork and files:*

- Fork creates for the child a reference to the files(struct file)* of the parent.

- Because parent and child share a reference:

- Fork creates for the child a reference to the files(struct file)* of the parent.

- The file is removed only when both call the close command.

- Both respond to the same offset.

very important points regarding threads:

- When a thread opens a file, all other threads have access to that file, but, they the ref count of the struct file is 1. That is, in order to close the file only one thread shall call close.

- all global variables are shared.

- what can a soft link provide?*

- what can a hard link provide?*

- It can provide merely the name of the path, and thus the name of the files. It has nothing to do with the inode of the files.*

- hard-link is a creation of a new file struct. It does not hold the name of the file*

- The task of saving names of files is given to the dirent struct*. Hard-link of a file has nothing to do with its name

What are the steps of finding /usr/ast/mbox

- root directory is on memory with the dirent structs it holds.*

- we find usr, there we see its attributes and a list of its blocks. Within the blocks we search the dirent with the name “ast” and proceed in the same manner.*

Does it matter how many references a page had with FIFO?

With FIFO it does not matter how much a page has been referenced. All matters is when it was first loaded in the memory.

The the optimal page replacement is not realistic?

- Because it is based on an idea that we know which pages are going to be requested, and according to that, we choose the pages in memory.*

- Thing is, we don’t know.*

- A computer has a virutal address of 38 bits.*

- Each page-frame is of size 16KB*

- The system is managed by a two-level page table, where the first-level page hold one page, and the size of each page table entry is 4 bytes.*

- How much bits of the virtual address should be assigned to the first-level page and the second-level page*

When we’re told the page-frame is of size 16KB, we can be sure the “offset” part is constructed of 14 bits. (2^14 = 16KB)

Now, if the first-level page size is 16KB, and each PTE is of size 4B, then the second-level page has 2^14/2^2 entries. That is, its offset is constructed of 12 bits.

In total. Second level - 12 bits. offset - 14 bits.

Because the address is of 38 bits, 12 bits construct the first-level page

- If the block size increases, the size assigned to the page table increases/decreases?*

- and will the chance for TLB hit increases?*

- will page-fault treatment be longer?*

- will inner fragmentation decrease?*

Decreases, because it sure has now less entries.

Yes, because the memory is more “dense”, instead of being spread over x page, it is now spread over y pages, when y<x.></x.>

Yes, because copying from disk to memory takes longer.

No, it most probably increase because the minimum size for allocation is now bigger, thus requests for allocation of smaller chunks will hold a much bigger space than they need.

- what does namex do?*

- what does dirlookup do(ip,name)?*

Given a path, the function looks for the relevant inode and retruns its number. If not found returns 0.

dirlookup searches within disk, in inode ip, in jumps of “struct dirent”, the direct which has the name of name, if found, it returns its inode.

- what iget(dev, inum) do?*

- what idup(struct inode*) do?*

what is the difference between link and ref in the inode struct

Find the inode with number inum on device dev

and return the in-memory copy. Does not lock

the inode and does not read it from disk.

- increases ref by 1, and returns the ip.*

- ref uses the current source, so it lets him know that someome is using it so even if you’re told to close the file - don’t.*(OS level)

- link asks to duplicate the source, to have additional dirent entry in another directory.* (Disk level)

What if during the execution context-switch between two threads of different processes, the TLB will not flush?

- Each process has its own unique mappings from virtual to physical.*

- After context-switch, the virtual address of the thread of the new process, has nothing to do with the virtual address of the previous process. That is, if we don’t flush, a given address may lead to a physical address of the previous process instead of the new.*