Midterm 1: Week 1 to 6 Flashcards

What is an intelligent robot?

Intelligent robots are devices made of hardware and software.

The primary structure of intelligence in a robot is the sense-plan-act loop that allows reaction to the surrounding world.

Sense-plan-act loop

Sense: Gather information

Plan: Use that information to decide on a series of behaviours that achieves a goal

Act: Perform the plan
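A minimal sketch of the loop, assuming a hypothetical one-dimensional world in which the robot only has to reach a goal position. The names `sense`, `plan`, `act`, `run_loop`, and the `world` dictionary are illustrative and not from the course material.

```python
def sense(world):
    # Sense: gather information (here, the signed distance to the goal).
    return world["goal"] - world["position"]


def plan(distance_to_goal, max_step=1.0):
    # Plan: choose a step toward the goal, capped at max_step per cycle.
    return max(-max_step, min(max_step, distance_to_goal))


def act(world, step):
    # Act: perform the plan by changing the robot's position.
    world["position"] += step


def run_loop(world, tolerance=1e-9, max_iterations=100):
    # Repeat sense -> plan -> act until the goal is reached.
    for iteration in range(max_iterations):
        distance = sense(world)
        if abs(distance) <= tolerance:
            return iteration
        act(world, plan(distance))
    return max_iterations


world = {"position": 0.0, "goal": 3.5}
iterations = run_loop(world)
```

The robot re-senses on every cycle instead of committing to one long plan, which is what lets the loop react to the surrounding world.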

State Space

Set of all physical situations that could arise. It captures the meaningful properties of the robot.

Notice that determining the correct representation of the state space is the #1 task of a roboticist.

Action Space

The commands, forces, or other effects the robot can apply to change its own state or that of the environment.

Notice that the action space can be state-dependent.

Kinematics

Considers constraints (limitations) on the state space and how we can transition between states.

A more formal definition is the following:

Kinematics describes the motion of points, bodies (objects), and systems of bodies (groups of objects) without considering the forces that cause them to move.

Dynamics

Considers the idealized physics of motion.

- Forces

- Control

Model

A function that describes a physical phenomenon or a system. Models are useful if they can predict reality up to some degree.

The mismatch between the model's prediction and reality is called noise or error.
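As an illustration, the sketch below uses a constant-velocity model, which is an assumed example and not a model named in these notes. The measured value is a made-up sensor reading used only to show how the error is computed.

```python
def constant_velocity_model(position, velocity, dt):
    # Model: predict the next position assuming the velocity stays constant.
    return position + velocity * dt


predicted = constant_velocity_model(position=0.0, velocity=2.0, dt=0.5)
measured = 1.1  # hypothetical sensor reading, for illustration only
error = measured - predicted  # mismatch between reality and the model
```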

Omnidirectional Robots

Robots that can move in any direction without needing to rotate.
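A rough sketch of what this means for motion, assuming the state is (x, y, theta) in the global frame and the control is a velocity (vx, vy, omega) expressed in that same frame. The function name and the control parametrization are assumptions for illustration.

```python
def step_omnidirectional(state, velocity, dt):
    # State and velocity are both expressed in the global frame.
    x, y, theta = state
    vx, vy, omega = velocity
    # Translation does not depend on the heading theta, so the robot
    # can move sideways without rotating first.
    return (x + vx * dt, y + vy * dt, theta + omega * dt)


# Move purely along the global y axis while keeping the heading unchanged.
sideways = step_omnidirectional((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), dt=2.0)
```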

State of an Omnidirectional Robot

Control of an Omnidirectional Robot

Notes on Inertial frames of reference

G, the global frame of reference, is fixed, i.e. it has zero velocity in our previous example.

- If a robot’s position is known in the global frame, life is good!

- We can correctly report its position

- We can compute the direction and distance to a goal point

- We can avoid collisions with obstacles also known in global frame
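The last two points can be sketched as follows, assuming 2D points given as (x, y) tuples in the global frame. The function names and the `clearance` parameter are hypothetical.

```python
import math


def direction_and_distance(robot_xy, goal_xy):
    # Both points are in the global frame, so a plain subtraction
    # gives the vector from the robot to the goal.
    dx = goal_xy[0] - robot_xy[0]
    dy = goal_xy[1] - robot_xy[1]
    return math.atan2(dy, dx), math.hypot(dx, dy)


def too_close(robot_xy, obstacle_xy, clearance):
    # Collision check against an obstacle known in the same global frame.
    dx = obstacle_xy[0] - robot_xy[0]
    dy = obstacle_xy[1] - robot_xy[1]
    return math.hypot(dx, dy) < clearance


direction, distance = direction_and_distance((1.0, 1.0), (4.0, 5.0))
```

Here `direction` is an angle in radians measured from the global x axis.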

Kinematics as Constraints

Notice that the idea of using vector spaces or sets to represent everything that can happen is too broad to capture robot details.

A common form of kinematics is to also state a set of constraints. These can be on the allowable states or on how to move between states.

Kinematics of a simple car

State

Kinematics of a simple car

Control

Kinematics of a simple car

Velocities

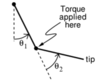

Instantaneous Center of Rotation

The centre of the circle traced out by the turning path.

Undefined for straight path segments.
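One way to compute it, assuming a bicycle-style simple car whose reference point is the centre of the rear axle, with a known wheelbase and a steering angle that is positive for left turns. These parameters and the function name are assumptions, since the car figures are not reproduced here.

```python
import math


def instantaneous_center_of_rotation(x, y, theta, steering_angle, wheelbase):
    # Assumed model: (x, y) is the rear-axle centre, theta is the heading,
    # and a positive steering angle turns the car to the left.
    if abs(math.tan(steering_angle)) < 1e-12:
        return None  # straight segment: the centre is undefined
    # Signed turning radius: positive for left turns, negative for right.
    radius = wheelbase / math.tan(steering_angle)
    # The centre lies perpendicular to the heading, at the turning radius.
    return (x - radius * math.sin(theta), y + radius * math.cos(theta))


icr = instantaneous_center_of_rotation(0.0, 0.0, 0.0, math.pi / 4, wheelbase=2.0)
straight = instantaneous_center_of_rotation(0.0, 0.0, 0.0, 0.0, wheelbase=2.0)
```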

The Dubins Car Model

Visualize a car that can only move forward at a constant speed s and with a maximum steering angle.

First Robotics Theorem

The shortest paths of the Dubins Car can be decomposed into:

L: max left turn

R: max right turn

S: zero turn

*This rules out ever turning partially.

Motion Primitives

The fixed motion types L, R, and S are called primitives. We can use them to decompose a series of motions to reach a goal.
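A sketch of chaining primitives, assuming the maximum steering angle is summarized by a minimum turning radius and that each primitive is held for a given duration. `apply_primitive`, `follow`, and `min_radius` are hypothetical names. The motion along each arc is integrated exactly.

```python
import math


def apply_primitive(state, primitive, duration, speed=1.0, min_radius=1.0):
    # state is (x, y, theta) in the global frame.
    x, y, theta = state
    # L and R turn as tightly as allowed; S does not turn at all.
    turn_rate = {"L": speed / min_radius, "S": 0.0, "R": -speed / min_radius}[primitive]
    if turn_rate == 0.0:
        distance = speed * duration
        return (x + distance * math.cos(theta), y + distance * math.sin(theta), theta)
    new_theta = theta + turn_rate * duration
    radius = speed / turn_rate  # signed: positive for L, negative for R
    x += radius * (math.sin(new_theta) - math.sin(theta))
    y -= radius * (math.cos(new_theta) - math.cos(theta))
    return (x, y, new_theta)


def follow(state, plan, speed=1.0, min_radius=1.0):
    # Apply a sequence of (primitive, duration) pairs in order.
    for primitive, duration in plan:
        state = apply_primitive(state, primitive, duration, speed, min_radius)
    return state


# Quarter turn left, straight for 2 time units, quarter turn right.
final_state = follow((0.0, 0.0, 0.0), [("L", math.pi / 2), ("S", 2.0), ("R", math.pi / 2)])
```

Finding which sequence and which durations give the shortest path is a separate problem that this sketch does not attempt.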

Dubins Airplane

State of a Unicycle

Notice that the state here is the same as for the car and the omnidirectional robot, since we are only interested in the position and heading of the unicycle in the global frame.

$x = [\,{}^{G}p_x,\ {}^{G}p_y,\ {}^{G}\theta\,]$

Controls of a Unicycle

We consider the angular velocities about y and z.

On z it is how fast the wheel turns. On y it is how much it turns on the plane.

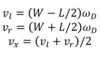

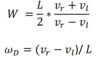

Recall that the control set of a unicycle includes the angular velocities about y and z. How do you calculate the velocities of a unicycle?
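A hedged sketch using the standard unicycle model, assuming a known wheel radius. The two controls are named `roll_rate` (how fast the wheel spins about its axle) and `turn_rate` (how fast the heading changes). Which of these corresponds to the y or z axis depends on the frame convention in the figures, so that mapping is left open here.

```python
import math


def unicycle_velocities(theta, roll_rate, turn_rate, wheel_radius):
    # Rolling without slipping: forward speed is radius times spin rate.
    forward_speed = wheel_radius * roll_rate
    # The wheel can only move along its current heading theta.
    x_dot = forward_speed * math.cos(theta)
    y_dot = forward_speed * math.sin(theta)
    theta_dot = turn_rate
    return (x_dot, y_dot, theta_dot)


velocities = unicycle_velocities(theta=math.pi / 2, roll_rate=2.0, turn_rate=0.5, wheel_radius=0.5)
```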

State of a differential drive vehicle

Same as the car, omnidirectional robot and unicycle