Machine Learning Flashcards

source: https://www.edureka.co/blog/interview-questions/machine-learning-interview-questions/ (45 cards)

A/B Testing

A/B is Statistical hypothesis testing for randomized experiment with two variables A and B. It is used to compare two models that use different predictor variables in order to check which variable fits best for a given sample of data.

Consider a scenario where you’ve created two models (using different predictor variables) that can be used to recommend products for an e-commerce platform.

A/B Testing can be used to compare these two models to check which one best recommends products to a customer.

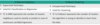

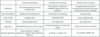

Bagging vs Boosting

Classification vs Regression

Classification:

- Predicting discrete class/label

- Binary and multi-class classification

Regression:

- Predicting continuous quantity

- multi-input regression is called multivariate regression

Cluster Sampling

It is a process of randomly selecting intact groups within a defined population, sharing similar characteristics.

Cluster Sample is a probability sample where each sampling unit is a collection or cluster of elements.

Collinearity and Multicollinearity

Collinearity occurs when two predictor variables (e.g., x1 and x2) in a multiple regression have some correlation.

Multicollinearity occurs when more than two predictor variables (e.g., x1, x2, and x3) are inter-correlated.

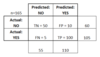

Confusion Matrix

A confusion matrix or an error matrix is a table which is used for summarizing the performance of a classification algorithm.

Gini Impurity vs Entropy in a Decision Tree

- Gini Impurity and Entropy are the metrics used for deciding how to split a Decision Tree.

Gini measurement is the probability of a random sample being classified correctly if you randomly pick a label according to the distribution in the branch.

Entropy is a measurement to calculate the lack of information. You calculate the Information Gain (difference in entropies) by making a split. This measure helps to reduce the uncertainty about the output label.

How Decision Tree node is split

- Measures such as, Gini Index and Entropy can be used to decide which variable is best fitted for splitting the Decision Tree at the root node.

- We can calculate Gini as following:

- Calculate Gini for sub-nodes, using the formula – sum of square of probability for success and failure (p^2+q^2).

- Calculate Gini for split using weighted Gini score of each node of that split

- Entropy is the measure of impurity or randomness in the data

Entropy Vs Information Gain

Entropy is an indicator of how messy your data is. It decreases as you reach closer to the leaf node.

The Information Gain is based on the decrease in entropy after a dataset is split on an attribute. It keeps on increasing as you reach closer to the leaf node.

Eigenvectors and Eigenvalues

Eigenvectors: Eigenvectors are those vectors whose direction remains unchanged even when a linear transformation is performed on them.

Eigenvalues: Eigenvalue is the scalar that is used for the transformation of an Eigenvector.

Ensemble learning

Ensemble learning is a technique that is used to create multiple Machine Learning models, which are then combined to produce more accurate results. A general Machine Learning model is built by using the entire training data set.

However, in Ensemble Learning the training data set is split into multiple subsets, wherein each subset is used to build a separate model. After the models are trained, they are then combined to predict an outcome in such a way that the variance in the output is reduced.

True Positive

False Positive

False Negative

True Negative

- True Positive:

- False Positive:

- False Negative:

- True Negative:

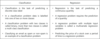

Better False Positives vs False Negatives?

It depends on the question as well as on the domain for which we are trying to solve the problem.

- If you’re using Machine Learning in the domain of medical testing, then a false negative is very risky, since the report will not show any health problem when a person is actually unwell.

- Similarly, if Machine Learning is used in spam detection, then a false positive is very risky because the algorithm may classify an important email as spam.

Inductive vs Deductive learning

Inductive learning is the process of using observations to draw conclusions

Deductive learning is the process of using conclusions to form observations

KNN vs K-Means

KNN:

- Supervised Learning model/technique

- Classification or regression

- K is number of label to predict

K-Means:

- Unsupervised Learning/technique

- Clustering (or grouping)

- K is the number of clusters to identify/learn from the data

Python libraries for Data Analysis

- NumPy

- SciPy

- Pandas

- SciKit

- Matplotlib

- Seaborn

- Bokeh

Deep Learning vs Machine Learning

- Machine Learning is all about algorithms that parse data, learn from that data, and then apply what they’ve learned to make informed decisions.

- Deep Learning is a form of machine learning that is inspired by the structure of the human brain and is particularly effective in feature detection.

Types of Machine Learning

- Supervised Learning - uses labeled data

- Unsupervised Learning - uses unlabeled data

- Reinforcement Learning - actions oriented, uses rewards and penalties system

Dealing with Missing Value

4 ways:

- Drop feature/column - if 95%+ of the data of the feature is missing

- Drop rows - if 5%- of the data is missing, it is safer to drop the rows

- Use flag indicator - if between 50%-95% of the data is missing, replace column/feature with flag indicator(e.i. something that say missing or not missing)

- Imputation - if less than 50% of the data is missing, imputate using method best suiited for this project:

- mean, mode, median

- replace missing values based on ration/distribution

- replace missing values using model prediction

Suppose you are given a data set which has missing values spread along 1 standard deviation from the median. What percentage of data would remain unaffected and Why?

Since the data is spread across the median, let’s assume it’s a normal distribution.

As you know, in a normal distribution, ~68% of the data lies in 1 standard deviation from mean (or mode, median), which leaves ~32% of the data unaffected. Therefore, ~32% of the data would remain unaffected by missing values.

You are given a cancer detection data set. Let’s suppose when you build a classification model you achieved an accuracy of 96%. Why shouldn’t you be happy with your model performance? What can you do about it?

Might not have been trained properly.

You can do the following:

- Add more data

- Treat missing outlier values

- Feature Engineering

- Feature Selection

- Multiple Algorithms

- Algorithm Tuning

- Ensemble Method

- Cross-Validation

Suppose you found that your model is suffering from low bias and high variance. Which algorithm you think could tackle this situation and Why?

Type 1: How to tackle high variance?

- Low bias occurs when the model’s predicted values are near to actual values.

- In this case, we can use the bagging algorithm (eg: Random Forest) to tackle high variance problem.

- Bagging algorithm will divide the data set into its subsets with repeated randomized sampling.

- Once divided, these samples can be used to generate a set of models using a single learning algorithm. Later, the model predictions are combined using voting (classification) or averaging (regression).

Type 2: How to tackle high variance?

- Lower the model complexity by using regularization technique, where higher model coefficients get penalized.

- You can also use top n features from variable importance chart. It might be possible that with all the variable in the data set, the algorithm is facing difficulty in finding the meaningful signal.

How do you map nicknames (Pete, Andy, Nick, Rob, etc) to real names?

- This problem can be solved in n number of ways. Let’s assume that you’re given a data set containing 1000s of twitter interactions. You will begin by studying the relationship between two people by carefully analyzing the words used in the tweets.

- This kind of problem statement can be solved by implementing Text Mining using Natural Language Processing techniques, wherein each word in a sentence is broken down and co-relations between various words are found.

- NLP is actively used in understanding customer feedback, performing sentimental analysis on Twitter and Facebook. Thus, one of the ways to solve this problem is through Text Mining and Natural Language Processing techniques.

You’re asked to build a random forest model with 10000 trees. During its training, you got training error as 0.00. But, on testing the validation error was 34.23. What is going on? Haven’t you trained your model perfectly?

- The model is overfitting the data.

- Training error of 0.00 means that the classifier has mimicked the training data patterns to an extent.

- But when this classifier runs on the unseen sample, it was not able to find those patterns and returned the predictions with more number of errors.

- In Random Forest, it usually happens when we use a larger number of trees than necessary. Hence, to avoid such situations, we should tune the number of trees using cross-validation.