Decision Trees Flashcards

(23 cards)

What is the ID3 algorithm?

Split (node, {example}):

- A

- For each value of A create child node

- Split training {example}’s to child nodes

- For each subset Split (child_node, {subset}) until subset is pure

What is the definition of Entropy?

H(S) = -p(+) log2 p(+) - p(-) log2 p(-)

Where p(+) and p(+) are % positive/negative examples in S

How can we interpret entropy?

How many bits needed to tell if X is positive or negative

How do we compute the expected drop in entropy (gain)?

What does gain tell us?

Average entropy after the split, biased to larger subsets

What is infomation gain?

Difference between the entopy before the split and after the split

What does infomation gain tell you?

How much more certain you are after a split

What happens if you run ID3 to completion?

All subsets will be pure

How to Decision trees overfit?

Running ID3 to completion, end up with lots of singleton subsets, not alot of confidence in estimates (only 1 traning example).

How can we avoid overfitting (decision trees)?

- Create full DT

- For each node

- Remove

- Measure performance on validation set

- Remove node that results in greatest improvement

- Repeat until accuracy drops

How is split entropy defined?

What do we use split entropy for

Normalize infomation gain by how fine grained the split is

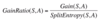

Definition of gain ratio?

What does GainRation penalize?

Attributes with many values

What is the problem with infomation gain?

Biased towards attributes with many values (they create lots of small pure subsets)

Whats unique about DT?

Not a black box, you can interpet rules of the tree

How can we expland DT to multi class?

- Predict most freq class

- Generalize entropy: H(S) = -Σc p(c) log2 p(c) where p(c) is % of class c in S

How can we expand DT to regression?

- Predict average of examples in subset (or use linear regression)

- Minimize variance in subsets

Whats are the pros of DT?

- Interpretable

- Easly handles irrelevant attributes (gain = 0)

- can handle missing data

- very compact

- very fast testing time: O(depth)

What are the cons of DT?

- Only axis-aligned splits of data

- Greedy (may not find best tree

- Exponentially many possible trees

How do you create a random decision forest?

- Grow K different decision trees

- Pick a random subset Sr of training examples

- Grow a full ID3 tree Tr

- Pick from a subset d << D random attributes

- Compute gain based on Sr

- Repeat for r = 1…k

How do you classify an example with random forrests?

- Classify each X using trees T1 to Tk

- Use majority vote

What does entropy measure?

How pure/inpure a subset is