Data Structures Flashcards

(130 cards)

What are data structures?

the elementary particles of algorithms, data structures are woven into the fabric of computer science and are essential building blocks of many coding solutions.

True or False

Data structures can be boiled down to the manipulation of data

True

manipulating data involves structuring that data.

What is complexity analysis?

A single coding problem may have multiple solutions using different data structures, they are not all equal (ie time vs space)

During a coding interview, when asked (about your code) “can you improve it and do better” is usually referring to?

complexity analysis

Did you choose the most efficient data structure (nested for loop over a hash table) or did the question place more emphasis on space vs execution time?

What is space-time complexity?

time: how fast algorithm runs

space: how much memory an algorithm is utilizing

all depends on the use-case

True or False

Memory is a bounded type of storage

True

memory is finite (limited)

bits are?

8 bits is a?

binary 0 and 1’s (base 2)

byte

True or False

pointers can be allocated in memory as well

True

and pointers aren’t memory intensive and don’t have to be in continuous memory.

True or False

Accessing a memory address is just about instantaneous?

True

True or False

32bit (fixed-width) is 4 bytes memory

True

True or False

64bit (fixed-width) is 8 bytes memory

True

A single byte can represent up to ___ data values

256

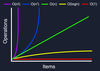

Constant time is represent as?

O(1)

if the value of T(n) is bounded by a value that does not depend on the size of the input. ie, accessing any single element in an array takes constant time as only one operation has to be performed to locate it.

Logarithmic time is represented as?

O(log(n))

Algorithms taking logarithmic time are commonly found in operations on binary trees or when using binary search.

An O(log n) algorithm is considered highly efficient, as the ratio of the number of operations to the size of the input decreases and tends to zero when n increases.

Linear time is represented as?

O(n)

the running time increases at most linearly with the size of the input.

Log-Linear time is represented as?

O(nlog(n))

gives us a means of notating the rate of growth of an algorithm that performs better than O(n2) but not as well as O(n).

Quadratic time is represented as?

O(n2)

represents an algorithm whose performance is directly proportional to the squared size of the input data set (think of Linear, but squared). … In doing so, we are passing over the same array twice with each search.

Cubic time is represented as?

O(n3)

An algorithm is said to run in cubic time if the running time of the three loops is proportional to the cube of N. When N triples, the running time increases by N * N * N. Time Complexity of a loop is said as O(log N) if the loop variables is divided / multiplied by a constant amount.

Exponential time is represented as?

O(2n)

denotes an algorithm whose growth doubles with each additon to the input data set. If you know of other exponential growth patterns, this works in much the same way. The time complexity starts off very shallow, rising at an ever-increasing rate until the end.

Factorial time is represented as?

O(n!)

True or False

In terms on Big O Notation, it’s always in the context of the worst case senario?

True

Big O defines addresses when a function performs it’s worst in time complexity, when sh!t goes sideways!

In Big O Notation, which has a better time complexity? O(n) or O(1)

O(1) constant time

In Big O Notation, which has a better time complexity? O(n) or O(log n)

O(log n) logarithmic time

In Big O Notation, which has a better time complexity? O(n3) or O(n!)

O(n3) cubic time