Computer Vision Flashcards

(125 cards)

Perspective projection

- y / Y = f / Z -> y = fY/Z

- Similarly, x = fX/Z

- (X,Y,Z) -> (x,y): R³ -> R²
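As a worked check of the two formulas above, here is a minimal sketch in plain Python. The function name `project` and the sample numbers are illustrative assumptions, not part of the original cards.

```python
def project(X, Y, Z, f):
    """Pinhole projection of a 3D point (camera frame) onto the image plane."""
    if Z == 0:
        raise ValueError("Z must be non-zero: the point lies in the camera plane")
    # x = fX/Z and y = fY/Z, as on the card
    return f * X / Z, f * Y / Z


# Hypothetical values: a point 4 units in front of a camera with f = 0.5
x, y = project(2.0, 1.0, 4.0, f=0.5)
print(x, y)
```

Doubling Z halves both x and y, which is the familiar "farther objects look smaller" effect.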

Coordinate conversion

- Cartesian to homogeneous

1) P = (x,y) -> (x,y,1)

2) P ∈ R² -> P̃ ∈ P²

- Homogeneous to Cartesian

1) P̃ = (x̃, ỹ, z̃) -> P = (x,y)

Sidenote: x = x̃/z̃, y = ỹ/z̃
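The two conversions can be sketched as a pair of small functions. The names `to_homogeneous` and `to_cartesian` are illustrative assumptions.

```python
def to_homogeneous(p):
    """(x, y) -> (x, y, 1)."""
    x, y = p
    return (x, y, 1.0)


def to_cartesian(p_h):
    """(x~, y~, z~) -> (x~/z~, y~/z~)."""
    xt, yt, zt = p_h
    if zt == 0:
        raise ValueError("a point with third coordinate 0 has no Cartesian equivalent")
    return (xt / zt, yt / zt)


# Any non-zero scaling of a homogeneous point maps to the same Cartesian point
print(to_cartesian((6.0, 8.0, 2.0)))
print(to_cartesian(to_homogeneous((3.0, 4.0))))
```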

Perspective projection equation

2D point = [x̃;ỹ;z̃]

Projection matrix = [f 0 0 0; 0 f 0 0; 0 0 1 0]

3D point: [X; Y; Z; 1]

- 2D point = projection matrix * 3D point

- x̃ = fX, ỹ = fY, z̃ = Z

- To convert back to cartesian, divide by third coordinate (i.e., x = fX/Z, y = fY/Z)
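The matrix form can be checked numerically. This is a minimal NumPy sketch; the function name `project_homogeneous` and the sample point are assumptions made for illustration.

```python
import numpy as np


def project_homogeneous(point_3d, f):
    """Project [X, Y, Z] with the 3x4 projection matrix, then dehomogenize."""
    P = np.array([[f, 0.0, 0.0, 0.0],
                  [0.0, f, 0.0, 0.0],
                  [0.0, 0.0, 1.0, 0.0]])
    X_h = np.append(np.asarray(point_3d, dtype=float), 1.0)  # [X; Y; Z; 1]
    x_h = P @ X_h                                            # [fX; fY; Z]
    return x_h[:2] / x_h[2]                                  # divide by third coordinate


xy = project_homogeneous([2.0, 1.0, 4.0], f=0.5)
print(xy)
```

The result agrees with x = fX/Z and y = fY/Z computed directly.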

Intrinsic model

- An upgrade from the simple projection model

- Includes the following properties:

1) Change of coordinates from meters to pixels

2) Focal lengths are independent for x and y (defined as fₓ and fᵧ)

3) A skew, s, is included

4) Optical centre (cₓ, cᵧ) is included

Equation for intrinsic model

[fₓ s cₓ 0; 0 fᵧ cᵧ 0; 0 0 1 0] = [1/pₓ 1/pₛ cₓ; 0 1/pᵧ cᵧ; 0 0 1] * [f 0 0 0; 0 f 0 0; 0 0 1 0]
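Multiplying out the right-hand side shows where each intrinsic parameter comes from: fₓ = f/pₓ, fᵧ = f/pᵧ and s = f/pₛ. The sketch below checks this with NumPy. The function name, the argument `inv_p_s` (standing in for 1/pₛ so that zero skew can be written as 0), and all numeric values are assumptions chosen for illustration.

```python
import numpy as np


def intrinsic_projection(f, p_x, p_y, inv_p_s, c_x, c_y):
    """Return the 3x4 matrix [f_x s c_x 0; 0 f_y c_y 0; 0 0 1 0]."""
    # Meters-to-pixels scaling, skew term and optical centre
    A = np.array([[1.0 / p_x, inv_p_s, c_x],
                  [0.0, 1.0 / p_y, c_y],
                  [0.0, 0.0, 1.0]])
    # Simple perspective projection with focal length f
    P0 = np.array([[f, 0.0, 0.0, 0.0],
                   [0.0, f, 0.0, 0.0],
                   [0.0, 0.0, 1.0, 0.0]])
    return A @ P0


# Hypothetical camera: 4 mm focal length, 10 micrometre square pixels, no skew
M = intrinsic_projection(f=0.004, p_x=1e-5, p_y=1e-5, inv_p_s=0.0,
                         c_x=320.0, c_y=240.0)
print(M)
```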

Extrinsic model

- Also known as the pose

- Consists of a 3x1 3D-Translation vector t

- Consists of a 3x3 3D-Rotation matrix R

Overall camera matrix

- Considers both intrinsic and extrinsic calibration parameters together

- [x̃;ỹ;z̃] = [fₓ s cₓ; 0 fᵧ cᵧ; 0 0 1]*[R3D t]*[X;Y;Z;1]
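A minimal sketch of the full projection, assuming NumPy. The function name `project_point`, the intrinsic values, and the pose (identity rotation, translation of 2 along Z) are illustrative assumptions.

```python
import numpy as np


def project_point(K, R, t, point_world):
    """Apply K [R | t] to a 3D world point and return pixel coordinates."""
    Rt = np.hstack([R, t.reshape(3, 1)])       # 3x4 extrinsic matrix [R t]
    X_h = np.append(point_world, 1.0)          # [X; Y; Z; 1]
    x_h = K @ Rt @ X_h                         # homogeneous pixel coordinates
    return x_h[:2] / x_h[2]


K = np.array([[400.0, 0.0, 320.0],
              [0.0, 400.0, 240.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)                                  # camera axes aligned with world axes
t = np.array([0.0, 0.0, 2.0])                  # world origin is 2 units in front
pixel = project_point(K, R, t, np.array([0.5, -0.25, 2.0]))
print(pixel)
```

Here the point ends up at depth 4 in the camera frame, so the pixel is (400·0.5/4 + 320, 400·(−0.25)/4 + 240).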

Lens cameras

- Larger aperture = more light = less focus (shallower depth of field)

- Faster shutter speed = shorter exposure time = less light reaching the sensor

Focal length

- Equation: 1/f = 1/zᵢ + 1/z₀

- f = focal length

- z₀ = where object is

- zᵢ = where image is formed
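Rearranging the equation gives the image distance zᵢ = 1 / (1/f − 1/z₀). A small sketch follows; the function name `image_distance` and the 50 mm / 1 m example are assumptions for illustration.

```python
def image_distance(f, z_o):
    """Solve 1/f = 1/z_i + 1/z_o for z_i (all lengths in the same unit)."""
    if z_o == f:
        raise ValueError("object at the focal distance: image forms at infinity")
    return 1.0 / (1.0 / f - 1.0 / z_o)


# Hypothetical example: 50 mm lens, object 1 m away (units: meters)
z_i = image_distance(0.05, 1.0)
print(z_i)
```

As the object moves farther away, zᵢ approaches f, so distant scenes focus roughly one focal length behind the lens.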

A/D

- Analogue to Digital conversion

- Spatially-continuous image is sampled (CCD or CMOS) into a finite number of pixels

- Pixel values are then quantized, e.g. 0 (black) to 255 (white)

Shannon Sampling Theorem

- Used to choose sampling resolution

- If signal is bandlimited, then fₛ ≥ 2 * fₘₐₓ, where:

1) fₛ = sampling rate (2 * fₘₐₓ is known as the Nyquist rate)

2) fₘₐₓ = maximum signal frequency

- This equation is enough to guarantee that the signal can be perfectly linearly reconstructed from its samples

- If signal is not bandlimited, then use an analogue low-pass filter to clip its frequency content to f_cut ≤ fₛ/2
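What goes wrong when fₛ < 2 * fₘₐₓ can be seen numerically: the samples of a too-fast sinusoid coincide with those of a slower one (aliasing). The sketch below assumes NumPy; the frequencies 7 Hz, 3 Hz and the 10 Hz sampling rate are illustrative choices.

```python
import numpy as np


def sample_sine(freq_hz, fs_hz, n_samples):
    """Return n_samples of sin(2*pi*freq*t) taken at sampling rate fs_hz."""
    n = np.arange(n_samples)
    return np.sin(2.0 * np.pi * freq_hz * n / fs_hz)


fs = 10.0                                   # sampling rate, too low for 7 Hz (needs >= 14)
undersampled = sample_sine(7.0, fs, 20)     # violates fs >= 2 * f_max
alias = sample_sine(3.0, fs, 20)            # 3 Hz = |7 - 10| Hz satisfies the condition

# The 7 Hz samples equal the 3 Hz samples up to a sign flip
indistinguishable = np.allclose(undersampled, -alias)
print(indistinguishable)
```

Once sampled, nothing distinguishes the 7 Hz signal from a 3 Hz one, which is why the low-pass filter has to be applied before sampling.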

Quantization

- Used to determine bit rate

- In audio, this would be the number of bits sent per second (CDs usually use 16 bits per sample)

- In imaging, this would be in terms of coding the value of pixels (Images are usually 24-bit)

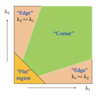

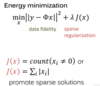
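Uniform quantization of pixel values can be sketched as follows, assuming NumPy and intensities normalized to the range [0, 1]. The function name `quantize` and the sample values are assumptions.

```python
import numpy as np


def quantize(signal, n_bits):
    """Map values in [0, 1] to integer codes 0 .. 2**n_bits - 1."""
    levels = 2 ** n_bits
    clipped = np.clip(signal, 0.0, 1.0)
    return np.round(clipped * (levels - 1)).astype(np.int64)


# 8 bits per channel gives codes from 0 (black) to 255 (white)
codes = quantize(np.array([0.0, 0.25, 1.0]), n_bits=8)
print(codes)
```

A 24-bit colour image corresponds to three such 8-bit channels per pixel.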

Compressed sensing

Steps

1) Regular sampling (namely Shannon)

2) Random sampling

- Used to collect a subset

- Number of measurements needed: M = O(K log(N/K))

3) Reconstruction

- Obtain x from y

4) Sparse approximation

- Is typically a non-linear reconstruction

- Should have a prior awareness

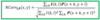

- Equation for prior awareness:
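The steps above can be sketched end to end with a random measurement matrix and a greedy sparse solver. The cards do not name a reconstruction algorithm; orthogonal matching pursuit (OMP) is used here purely as one common non-linear choice. The sizes N, M, K, the seed, and the function name `omp` are all assumptions.

```python
import numpy as np


def omp(A, y, k):
    """Orthogonal matching pursuit: greedy k-sparse estimate of x from y = A x."""
    n = A.shape[1]
    support = []
    residual = y.copy()
    coeffs = np.zeros(0)
    for _ in range(k):
        # Pick the column most correlated with the current residual
        idx = int(np.argmax(np.abs(A.T @ residual)))
        support.append(idx)
        # Least-squares fit of y on the columns selected so far
        coeffs, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coeffs
    x_hat = np.zeros(n)
    x_hat[support] = coeffs
    return x_hat


rng = np.random.default_rng(0)
N, M, K = 128, 48, 3                       # signal length, measurements, sparsity
x = np.zeros(N)
x[[10, 57, 101]] = [3.0, -2.0, 1.0]        # K-sparse signal (the prior: few non-zeros)
A = rng.standard_normal((M, N))            # random sampling patterns
A /= np.linalg.norm(A, axis=0)             # unit-norm columns
y = A @ x                                  # M << N random measurements
x_hat = omp(A, y, K)                       # obtain x from y
print(np.flatnonzero(np.abs(x_hat) > 1e-6))
```

The sparsity level K plays the role of the prior: without it, y = A x has infinitely many solutions because M < N.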

Compressed sensing hallmarks

- Stable

1) Acquisition and recovery are numerically stable

- Asymmetrical

- Democratic

1) Each measurement carries the same amount of information

- Encrypted

1) Random measurements are encrypted

- Universal

1) Same random patterns can be used to sense any sparse digital class

Convolution steps

1) Flip filter vertically and horizontally

2) Pad image with zeros

3) Slide flipped filter over the image and compute weighted sum
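The three steps map directly onto code. This is a minimal NumPy sketch that assumes an odd-sized kernel and returns an output of the same size as the image; the function name `convolve2d` is an assumption and the loops favour clarity over speed.

```python
import numpy as np


def convolve2d(image, kernel):
    """Same-size 2D convolution: flip, zero-pad, slide (odd-sized kernels)."""
    kh, kw = kernel.shape
    flipped = kernel[::-1, ::-1]                       # 1) flip vertically and horizontally
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)),       # 2) pad image with zeros
                    mode="constant")
    out = np.zeros(image.shape, dtype=float)
    for i in range(image.shape[0]):                    # 3) slide and compute weighted sum
        for j in range(image.shape[1]):
            window = padded[i:i + kh, j:j + kw]
            out[i, j] = np.sum(window * flipped)
    return out


# Convolving a unit impulse with a kernel reproduces the kernel
impulse = np.zeros((3, 3))
impulse[1, 1] = 1.0
kernel = np.arange(9, dtype=float).reshape(3, 3)
result = convolve2d(impulse, kernel)
print(result)
```

Without the flip in step 1 the impulse test would return the kernel rotated by 180 degrees, which is exactly the difference between convolution and correlation.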

Convolution distributive property

- Consider an image I

- Consider two filters g and h

- Image I is convolved with filters g and h separately and the results are added together (i.e. Î = (I * g) + (I * h))

- Distributive property states that ((I * g) + (I * h)) ≡ (I * (g + h))
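The property can be verified numerically on random data. The sketch assumes NumPy and uses a small full-size 2D convolution written for this check; the helper name `conv2d_full`, the array sizes and the seed are assumptions.

```python
import numpy as np


def conv2d_full(image, kernel):
    """Full 2D convolution: out[m, n] = sum_{u,v} kernel[u, v] * image[m-u, n-v]."""
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.zeros((ih + kh - 1, iw + kw - 1))
    for u in range(kh):
        for v in range(kw):
            # Each kernel tap adds a shifted, scaled copy of the image
            out[u:u + ih, v:v + iw] += kernel[u, v] * image
    return out


rng = np.random.default_rng(0)
I = rng.random((5, 5))
g = rng.random((3, 3))
h = rng.random((3, 3))

two_passes = conv2d_full(I, g) + conv2d_full(I, h)
one_pass = conv2d_full(I, g + h)
print(np.allclose(two_passes, one_pass))
```

In practice this means two filters of the same size can be summed once and applied in a single pass over the image.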

Correlation

- No flipping of kernel

- No padding of the image

- Just slide filter over the image and compute weighted sum

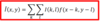

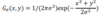
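Correlation as described on this card (no flip, no padding) can be sketched as below, assuming NumPy. The function name `correlate2d_valid` and the example arrays are assumptions; the output is smaller than the input because the filter only visits positions where it fits entirely inside the image.

```python
import numpy as np


def correlate2d_valid(image, kernel):
    """Slide the unflipped kernel over the image and take weighted sums."""
    kh, kw = kernel.shape
    oh = image.shape[0] - kh + 1
    ow = image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


image = np.arange(16, dtype=float).reshape(4, 4)   # image[i, j] = 4*i + j
kernel = np.array([[1.0, 0.0],
                   [0.0, -1.0]])                   # image[i, j] - image[i+1, j+1]
response = correlate2d_valid(image, kernel)
print(response)
```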

Normalized correlation

- Used to measure similarity between a patch and image at location (x,y)
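One common variant is zero-mean normalized cross-correlation, which gives a score in [-1, 1]. Whether the cards intend the zero-mean form is an assumption, as are the function name and the convention that x indexes columns and y indexes rows.

```python
import numpy as np


def normalized_correlation(image, patch, x, y):
    """Zero-mean normalized correlation between patch and the image window at (x, y)."""
    h, w = patch.shape
    window = image[y:y + h, x:x + w].astype(float)
    wz = window - window.mean()
    pz = patch.astype(float) - patch.mean()
    denom = np.linalg.norm(wz) * np.linalg.norm(pz)
    if denom == 0.0:
        return 0.0                      # flat window or flat patch: score undefined
    return float(np.sum(wz * pz) / denom)


rng = np.random.default_rng(1)
image = rng.random((6, 6))
patch = image[1:4, 2:5]                 # cut from the image at x=2, y=1

score_same = normalized_correlation(image, patch, x=2, y=1)
# A brighter, higher-contrast copy of the patch still matches
score_brighter = normalized_correlation(image, 2.0 * patch + 10.0, x=2, y=1)
print(score_same, score_brighter)
```

The normalization is what makes the score insensitive to gain and offset changes in brightness, unlike plain correlation.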

SSD

- Stands for Sum of Squared Differences

- Calculates the moving difference between the intensities of a patch and an image

- Given a template image/patch, find where it is located in another image by finding the displacement (x*,y*) which minimises the SSD cost
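A brute-force search over all displacements can be sketched as follows, assuming NumPy. The function name `ssd_match`, the random test image and the seed are assumptions; inputs are cast to float so that differences of unsigned integer pixels do not wrap around.

```python
import numpy as np


def ssd_match(image, template):
    """Return the displacement (x*, y*) minimising the SSD cost, and that cost."""
    image = image.astype(float)
    template = template.astype(float)
    th, tw = template.shape
    best_xy, best_cost = None, np.inf
    for y in range(image.shape[0] - th + 1):
        for x in range(image.shape[1] - tw + 1):
            diff = image[y:y + th, x:x + tw] - template
            cost = float(np.sum(diff ** 2))
            if cost < best_cost:
                best_xy, best_cost = (x, y), cost
    return best_xy, best_cost


rng = np.random.default_rng(2)
image = rng.random((8, 8))
template = image[2:5, 3:6]              # cut from the image at x=3, y=2
best_xy, best_cost = ssd_match(image, template)
print(best_xy, best_cost)
```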

Low-pass filter

- Smooths (blurs) an image by (weighted/unweighted) averaging pixels in its neighborhood

- A Gaussian filter is a common example

High-pass filter

- Computes finite (weighted/unweighted) differences between pixels and their neighbors

- Extracts important low-level information: intensity variations

- HP filters have zero mean and remove DC component from images

- An example use of HP filters is edge detection

- Horizontal edge: [-1 -1; 1 1]

- Vertical edge: [-1 1; -1 1]

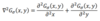

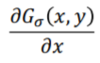
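The two edge kernels above can be tried on a synthetic step image. The sketch assumes NumPy and applies the kernels by correlation (no flip); the helper name `correlate_valid` and the test images are assumptions.

```python
import numpy as np


def correlate_valid(image, kernel):
    """Slide the kernel over the image (no flip, no padding)."""
    kh, kw = kernel.shape
    out = np.zeros((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


horizontal_edge = np.array([[-1.0, -1.0],
                            [1.0, 1.0]])
vertical_edge = np.array([[-1.0, 1.0],
                          [-1.0, 1.0]])

step = np.zeros((4, 4))
step[2:, :] = 1.0                                  # dark top half, bright bottom half

resp_h = correlate_valid(step, horizontal_edge)    # fires along the horizontal edge
resp_v = correlate_valid(step, vertical_edge)      # no vertical edge: all zeros
flat = correlate_valid(np.full((4, 4), 7.0), horizontal_edge)  # DC only: all zeros
print(resp_h)
```

Both kernels sum to zero, which is why the constant image produces no response.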

Derivative of Gaussian

- A high-pass filter

- Used to implement nth order image differences

- For example, with a second order derivative

- (∂²I / ∂x²) ≈ f₁ ∗ f₁ ∗ I

- (∂²I / ∂y²) ≈ f₂ ∗ f₂ ∗ I


Laplacian of Gaussian filter

- A high-pass filter

- Used to implement 2nd order image differences

- In the equation, ∇² represents the Laplacian operator

- ∇²f = (∂²f / ∂x²) + (∂²f / ∂y²)
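On a pixel grid the Laplacian is usually approximated with finite differences. The sketch below covers only the ∇² part (the Gaussian smoothing step is left out) and assumes NumPy, unit pixel spacing, and the standard 5-point stencil; the function name `laplacian` is an assumption.

```python
import numpy as np


def laplacian(f):
    """5-point finite-difference Laplacian on the interior of a 2D array."""
    return (f[:-2, 1:-1] + f[2:, 1:-1]       # neighbours above and below
            + f[1:-1, :-2] + f[1:-1, 2:]     # neighbours left and right
            - 4.0 * f[1:-1, 1:-1])           # centre pixel


# Test function f(x, y) = x^2 + y^2, whose Laplacian is 2 + 2 = 4 everywhere
y_idx, x_idx = np.mgrid[0:6, 0:6].astype(float)
f = x_idx ** 2 + y_idx ** 2
lap = laplacian(f)
print(lap)
```

The same sum of second differences corresponds to the kernel [0 1 0; 1 -4 1; 0 1 0].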