Ch 2 - Signal Detection and Absolute Judgement Flashcards

(19 cards)

Signal detection theory

hits, misses, false alarms, and correct rejections – the four possible outcomes when an operator decides whether a signal is present or absent
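A minimal sketch of how trials map onto the four outcomes and how the usual rates are formed. Hit and miss rates are taken over signal trials, and false-alarm and correct-rejection rates over noise trials. The function names and the trial data are hypothetical, chosen for illustration.

```python
from collections import Counter

def classify(signal_present: bool, said_yes: bool) -> str:
    """Map one trial to its signal detection outcome."""
    if signal_present:
        return "hit" if said_yes else "miss"
    return "false alarm" if said_yes else "correct rejection"

def outcome_rates(trials):
    """trials: iterable of (signal_present, said_yes) pairs.

    Hit/miss rates use signal trials as the denominator;
    FA/CR rates use noise trials. Needs at least one of each.
    """
    counts = Counter(classify(s, r) for s, r in trials)
    n_signal = counts["hit"] + counts["miss"]
    n_noise = counts["false alarm"] + counts["correct rejection"]
    return {
        "P(H)": counts["hit"] / n_signal,
        "P(M)": counts["miss"] / n_signal,
        "P(FA)": counts["false alarm"] / n_noise,
        "P(CR)": counts["correct rejection"] / n_noise,
    }

# Hypothetical data: 4 signal trials followed by 4 noise trials
trials = [(True, True), (True, True), (True, True), (True, False),
          (False, True), (False, False), (False, False), (False, False)]
print(outcome_rates(trials))
```

Because P(M) = 1 − P(H) and P(CR) = 1 − P(FA), only two of the four rates are independent.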

Beta

ratio of the height of the signal-plus-noise curve to the height of the noise curve at XC, the criterion (the level of neural activity above which the operator decides “yes”); β = 1 when XC sits at the intersection of the two curves
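A minimal sketch of β as a ratio of curve heights at the criterion. It assumes the common equal-variance Gaussian model, with noise ~ N(0, 1) and signal-plus-noise ~ N(d′, 1). The function name `beta_at_criterion` is hypothetical.

```python
from statistics import NormalDist

def beta_at_criterion(x_c: float, d_prime: float) -> float:
    """Height of the signal-plus-noise curve divided by the height of the
    noise curve at criterion x_c (assumed equal-variance Gaussian model)."""
    noise = NormalDist(0.0, 1.0)
    signal = NormalDist(d_prime, 1.0)
    return signal.pdf(x_c) / noise.pdf(x_c)

d = 1.0
print(beta_at_criterion(d / 2, d))  # criterion at the intersection: 1.0
print(beta_at_criterion(1.0, d))    # criterion moved right: about 1.65 (conservative)
print(beta_at_criterion(0.0, d))    # criterion moved left: about 0.61 (liberal)
```

Moving XC to the right raises β, and moving it to the left lowers β.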

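If signal and noise are equally probable,

optimum beta (βOPT) = 1, provided the payoffs for the four outcomes are also balanced

If signal and noise are NOT equally probable

XC should be shifted to match βOPT: to the right (higher β) when noise is more probable, to the left (lower β) when signal is more probable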

Optimum beta βOPT

where β SHOULD be set, based on the probabilities of signal and noise and the payoffs for each outcome

Optimum beta

ratio of the probability of no signal to the probability of a signal, multiplied by the ratio of (value of correct rejections + cost of false alarms) to (value of hits + cost of misses) (13)
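The card's formula written as an equation. V stands for the value (payoff) of a correct outcome and C for the cost of an error, both entered as positive magnitudes. The V/C notation is an assumption made for this sketch.

```latex
\beta_{\text{OPT}} = \frac{P(N)}{P(S)} \times \frac{V(CR) + C(FA)}{V(H) + C(M)}
```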

Optimum beta

- Increase in numerator (value of correct rejections or cost of false alarms) – higher βOPT, which should lead to more conservative responding

Optimum beta

- Increase in denominator (value of hits or cost of misses) – lower βOPT, which should lead to more liberal responding
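A minimal sketch of the optimum-beta arithmetic, showing the numerator and denominator effects from the cards above. Costs are entered as positive magnitudes. The function name, its parameters, and the default payoffs of 1.0 are hypothetical.

```python
def beta_opt(p_signal, v_hit=1.0, c_miss=1.0, v_cr=1.0, c_fa=1.0):
    """Optimum beta: odds of noise to signal times the payoff ratio
    (V(CR) + C(FA)) / (V(H) + C(M)). Costs are positive magnitudes."""
    p_noise = 1.0 - p_signal
    return (p_noise / p_signal) * ((v_cr + c_fa) / (v_hit + c_miss))

print(beta_opt(0.5))              # equal odds, symmetric payoffs: 1.0
print(beta_opt(0.1))              # rare signal: about 9 (conservative)
print(beta_opt(0.5, c_fa=3.0))    # costlier false alarms raise the numerator: 2.0
print(beta_opt(0.5, c_miss=3.0))  # costlier misses raise the denominator: 0.5 (liberal)
```

The last case matches the alarm-system logic: when misses are expensive, βOPT falls below 1.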

Sensitivity

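- separating sensitivity from bias can help determine whether errors reflect low sensitivity or response bias: a high β (conservative responding) produces misses, a low β (liberal responding) produces false alarms

- The higher d′ (the separation between the noise and signal distributions), the more sensitive the receiver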
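A minimal sketch of how d′ and β are usually estimated from P(H) and P(FA). It assumes the equal-variance Gaussian model: d′ = z(H) − z(FA), and β = φ(z(H)) / φ(z(FA)), where z is the standard normal quantile and φ the standard normal density. Rates of exactly 0 or 1 must be adjusted first, because `inv_cdf` raises `StatisticsError` for them. The function names are hypothetical.

```python
from statistics import NormalDist

_std = NormalDist()    # standard normal distribution
_z = _std.inv_cdf      # quantile (z-score) function
_phi = _std.pdf        # density function

def d_prime(p_hit: float, p_fa: float) -> float:
    """Sensitivity: distance between noise and signal means in SD units."""
    return _z(p_hit) - _z(p_fa)

def beta(p_hit: float, p_fa: float) -> float:
    """Bias: signal curve height over noise curve height at the criterion."""
    return _phi(_z(p_hit)) / _phi(_z(p_fa))

print(round(d_prime(0.84, 0.16), 2))  # about 1.99, with beta close to 1
print(round(beta(0.7, 0.1), 2))       # beta > 1: conservative responding
print(round(beta(0.95, 0.5), 2))      # beta < 1: liberal responding
```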

ROC Curve

- receiver operating characteristic – shows the joint influence of sensitivity and response bias

- Uses P(H) and P(FA) as the axes, since P(M) = 1 − P(H) and P(CR) = 1 − P(FA)
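A minimal sketch of how an ROC curve is traced: hold d′ fixed and sweep the criterion XC from conservative to liberal, recording (P(FA), P(H)) at each setting. It assumes the equal-variance Gaussian model, and the criterion values are arbitrary. With d′ = 0 the points lie on the chance diagonal. Larger d′ bows the curve toward the upper-left corner.

```python
from statistics import NormalDist

def roc_points(d_prime, criteria):
    """(P(FA), P(H)) for each criterion x_c, assuming
    noise ~ N(0, 1) and signal-plus-noise ~ N(d_prime, 1)."""
    noise = NormalDist(0.0, 1.0)
    signal = NormalDist(d_prime, 1.0)
    # A "yes" occurs whenever activity exceeds x_c.
    return [(1 - noise.cdf(c), 1 - signal.cdf(c)) for c in criteria]

criteria = [2.0, 1.0, 0.0, -1.0]  # from high (conservative) to low (liberal)
for d in (0.0, 1.0, 2.0):
    pts = roc_points(d, criteria)
    print(d, [(round(fa, 2), round(h, 2)) for fa, h in pts])
```

Moving along one curve changes only bias. Moving to a different curve changes sensitivity.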

Fuzzy Signal detection theory

- In the previous examples it is clear what is signal and what is noise, but in practice this distinction is not always possible; sometimes it is fuzzy

- An event in fuzzy SDT can belong to the signal set (S) to some degree s between 0 and 1

- Likewise, a response can belong to the response set (R) to some degree r between 0 and 1

- The amounts of hits, misses, FAs, and CRs for each event can then be computed from s and r

- Example on pages 19-20

- Fuzzy hit rate = sum of H values over all events / sum of signal membership values (s)

- Fuzzy FA rate = sum of FA values over all events / sum of noise membership values (1 − s)

- Once hit and FA rates computed, measures of sensitivity and bias can be calculated just as in conventional SDT
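A minimal sketch of the fuzzy rates. The per-event mapping functions used here (H = min(s, r), M = max(s − r, 0), FA = max(r − s, 0), CR = min(1 − s, 1 − r)) are the ones commonly used in fuzzy SDT. They are an assumption in this sketch, so check them against the worked example on pages 19-20. The function names and event data are hypothetical.

```python
def fuzzy_outcomes(s: float, r: float) -> dict:
    """Fuzzy outcome memberships for one event.

    s: degree the event belongs to the signal set (0-1)
    r: degree the response belongs to the "yes" response set (0-1)
    Mapping functions are assumed (min/max form), not taken from the text.
    """
    return {
        "H": min(s, r),
        "M": max(s - r, 0.0),
        "FA": max(r - s, 0.0),
        "CR": min(1 - s, 1 - r),
    }

def fuzzy_rates(events):
    """events: list of (s, r) pairs. Returns (hit rate, FA rate)."""
    outcomes = [fuzzy_outcomes(s, r) for s, r in events]
    hit_rate = sum(o["H"] for o in outcomes) / sum(s for s, _ in events)
    fa_rate = sum(o["FA"] for o in outcomes) / sum(1 - s for s, _ in events)
    return hit_rate, fa_rate

# Hypothetical events: (signal membership s, response membership r)
events = [(1.0, 1.0), (0.75, 0.5), (0.25, 0.5), (0.0, 0.0)]
print(fuzzy_rates(events))  # (0.875, 0.125)
```

These mappings keep H + M = s and FA + CR = 1 − s for every event, so the two denominators match the card's definitions. The resulting rates can be passed to the same d′ and β formulas used in conventional SDT.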

- Applications of signal detection theory

- Medical diagnosis – making a yes/no decision about whether an abnormality is present, influenced by factors such as the salience of the abnormality, the number of converging symptoms, and the doctor's training

- Alarms and alarm systems – most are set with a low β (liberal threshold) because the cost of a miss is greater than the cost of a false alarm

- Recognition memory and eyewitness testimony (22)

- Vigilance paradigm – common application of SDT (25)

- Experiments have shown that the vigilance level is lower than desirable and declines steeply during the first half hour of a watch

- Measuring vigilance performance – based on factors that influence (increase or decrease) sensitivity and bias, listed on page 26

- Arousal theory vs. sustained demand theory vs. expectancy theory (under expectancy theory, missing one signal makes the gap between signals seem larger, which lowers the expected signal probability, raises β, and makes further misses more likely)

- Techniques to combat loss of vigilance (28)

- Absolute judgement (33)

- HS = amount of information in the stimulus: the base-2 logarithm (log2) of the number of possible, equally likely events

- Examples: log2(4) = 2 bits, log2(16) = 4 bits

- HT = amount of information transmitted to the response, which lies between 0 and HS

- HS – HT = info loss (HLOSS)

- Information theory (41)

- Number of alternatives, N

- HS = log2(N)

- Unequal probabilities: the information in event i is Hi = log2(1/Pi)

- Average information conveyed by a series of events with differing probabilities that occur over time – like a series of warning lights on a panel or a series of communication commands

- HAVE = Σ Pi log2(1/Pi), summed over all events (example page 43)
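A minimal sketch of the information measures on these cards: Hi = log2(1/Pi) for a single event, and HAVE as the probability-weighted average of Hi. The function names and the warning-light probabilities are hypothetical.

```python
from math import log2

def info_of_event(p: float) -> float:
    """Information (bits) conveyed by one event with probability p."""
    return log2(1 / p)

def h_average(probs) -> float:
    """Average information: sum of P_i * log2(1/P_i) over all events."""
    return sum(p * info_of_event(p) for p in probs if p > 0)

# Hypothetical panel of four warning lights
print(h_average([0.25] * 4))                 # equally likely: log2(4) = 2.0 bits
print(h_average([0.5, 0.25, 0.125, 0.125]))  # unequal: 1.75 bits
```

When events are equally likely, HAVE reduces to log2(N). Any unequal distribution over the same N events gives a smaller HAVE.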

- HT Calculation example page 44

- Stimulus Response Matrix (45) Figure 2S.2

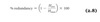
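A minimal sketch of computing HT from a stimulus-response matrix of counts, using the standard relation HT = HS + HR − HSR. Here HR is the information in the response distribution and HSR the information in the joint distribution of cells. The relation is assumed here; the worked example on page 44 may lay out the steps differently. The matrices and function names are hypothetical.

```python
from math import log2

def entropy(probs) -> float:
    """Sum of p * log2(1/p), skipping empty cells."""
    return sum(p * log2(1 / p) for p in probs if p > 0)

def transmitted_info(matrix):
    """Return (HT, HLOSS) from a stimulus-response count matrix.

    matrix[i][j] = number of times stimulus i drew response j.
    """
    total = sum(sum(row) for row in matrix)
    h_s = entropy([sum(row) / total for row in matrix])         # stimulus info
    h_r = entropy([sum(col) / total for col in zip(*matrix)])   # response info
    h_sr = entropy([n / total for row in matrix for n in row])  # joint info
    h_t = h_s + h_r - h_sr
    return h_t, h_s - h_t

perfect = [[10, 0, 0, 0], [0, 10, 0, 0], [0, 0, 10, 0], [0, 0, 0, 10]]
confused = [[1, 1, 1, 1]] * 4
print(transmitted_info(perfect))   # (2.0, 0.0): all information transmitted
print(transmitted_info(confused))  # (0.0, 2.0): nothing transmitted
```

Errors concentrated off the diagonal reduce HT and increase HLOSS.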

% redundancy = (1 − HAVE/HMAX) × 100, where HMAX = log2(N)
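A minimal sketch of percent redundancy, assuming the standard definition (1 − HAVE/HMAX) × 100 with HMAX = log2(N). It needs N ≥ 2, because log2(1) = 0. The probabilities are hypothetical.

```python
from math import log2

def percent_redundancy(probs) -> float:
    """(1 - H_ave / H_max) * 100, with H_max = log2(N); needs N >= 2."""
    h_max = log2(len(probs))
    h_ave = sum(p * log2(1 / p) for p in probs if p > 0)
    return (1 - h_ave / h_max) * 100

print(percent_redundancy([0.25] * 4))                 # equally likely: 0.0
print(percent_redundancy([0.5, 0.25, 0.125, 0.125]))  # 12.5
```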

bandwidth

How rapidly information is transmitted, expressed in bits per second
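A minimal sketch of the bandwidth arithmetic. It assumes bandwidth is computed as HT divided by the time taken to respond. The function name and the input values are hypothetical.

```python
def bandwidth(h_t_bits: float, response_time_s: float) -> float:
    """Information transmission rate in bits per second (assumed HT / time)."""
    return h_t_bits / response_time_s

print(bandwidth(2.0, 0.5))  # 4.0 bits/s for hypothetical HT = 2 bits in 0.5 s
```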